Controller is an umbrella term we use for tools that let us express ourselves through physical, and sometimes non-physical (more on this later), interaction with electronic musical instruments like synthesizers, samplers, drum machines, computer software, and so on. In acoustic and electro-acoustic instruments, the sound source and the elements that control and shape the sound are often inherently integrated into the architecture of the instrument itself. For example, a piano without a keyboard is not really a piano. The sound of a violin is shaped by the position of the fingers on the fingerboard, by the bow, or by plucking the strings directly. Amplified instruments like the electric guitar are additionally equipped with tone and volume knobs that help shape the dynamics and timbre of the instrument.

By contrast, a synthesizer remains a synthesizer whether or not it has a keyboard or any other controller attached to it. This is a big difference: the world of electronic instruments allows for a total decoupling of the things that make sounds from the things we use to control how those sounds are made. While this may seem like an extra step standing between the composer or musician and their instrument, splitting the sound source from the controller offers several benefits.

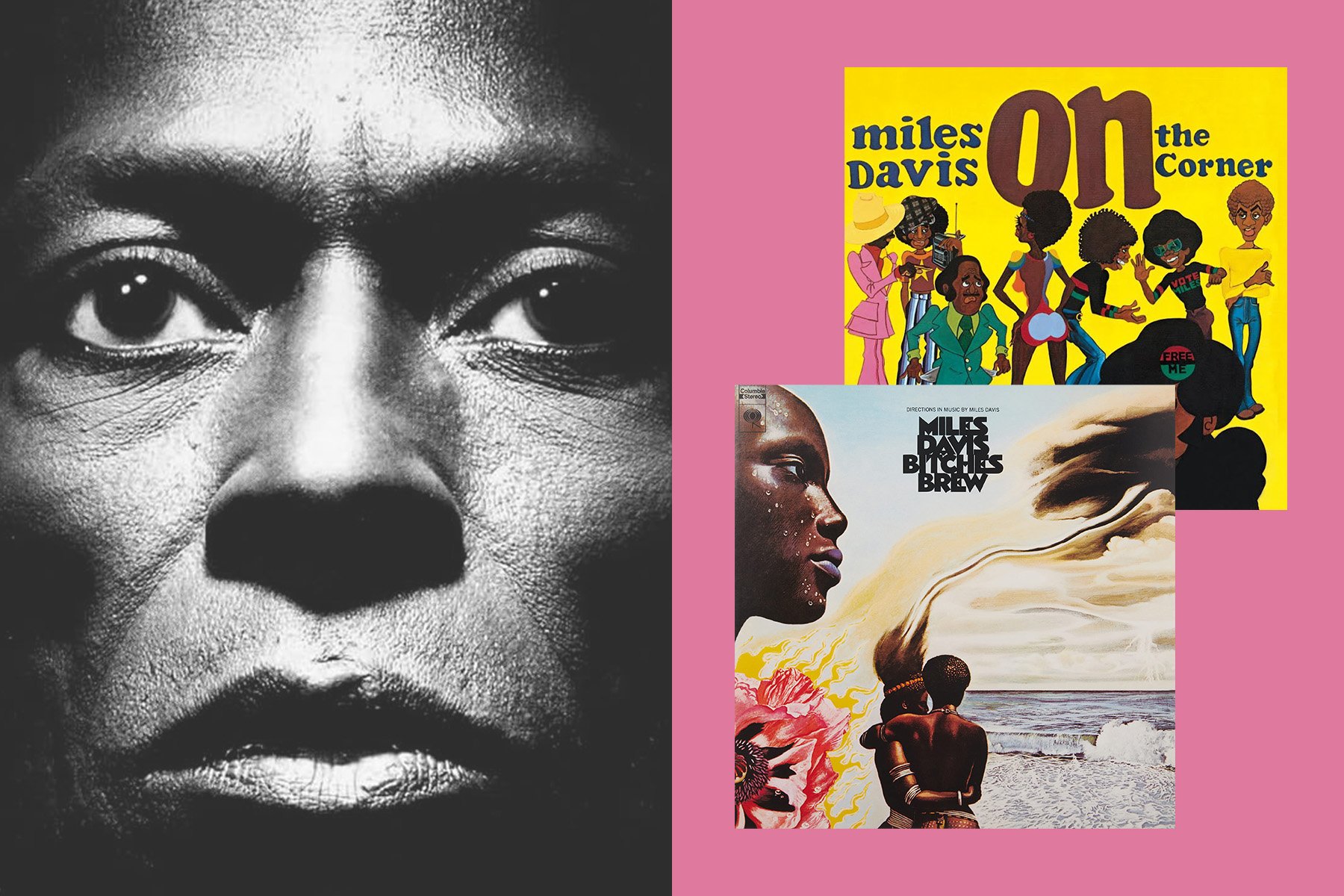

First of all, it opens up possibilities for new forms of expression and interaction with the process of music-making, which in turn affects the way we think about music, sound, composition, and performance. It is commonly said that instruments greatly influence the kind of music being created; thus, choosing how we interact with an instrument significantly shapes the music we end up making.

Secondly, the design of a controller can help musicians trained on a particular instrument overcome the sonic and technical limitations of that instrument. Historically, piano players were among the first to truly take advantage of this, as the keyboard was adopted early on as the standard way to control a synthesizer. But of course, over the years there have been plenty of attempts to incorporate other types of controller interfaces, some influenced by traditional instruments like guitars, drums, and woodwinds, and others taking on completely new forms.

Lastly, music controller design is a great framework for tackling difficult challenges such as accessibility; at its best, it can offer the joy of music-making to those who have been deprived of it for any number of reasons.

Now that we have clarified the benefits and power of music controllers, let's dive deeper into this world, starting with the various formats controllers use to communicate.

Communication is Everything

The history of music technology has many beginnings and, consequently, many paths that led to the abundance we are lucky to witness today. Over the years, the same musical and technical problems were encountered by different people in different places, all finding different solutions; as a result, many of those ideas evolved into a handful of distinct ways for electronic music equipment to communicate. Familiarity with these means of communication is valuable for a deeper understanding of electronic musical instruments, especially when we talk or think about controllers, since they act as bridges connecting our ideas and emotions to our instruments. Although getting in-depth on each way of interfacing controllers and instruments is well outside the scope of this article, we will briefly go over some of the most common ones.

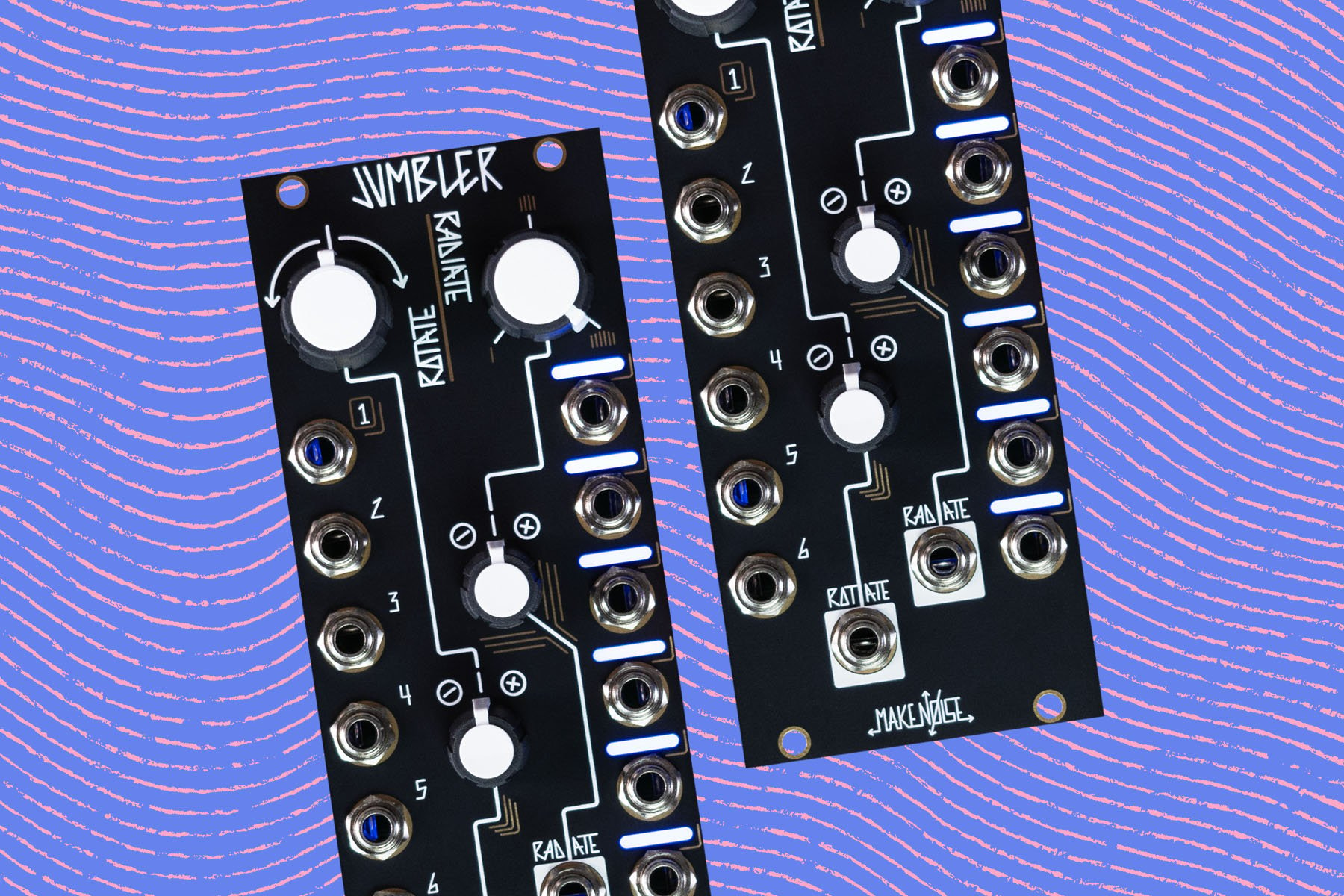

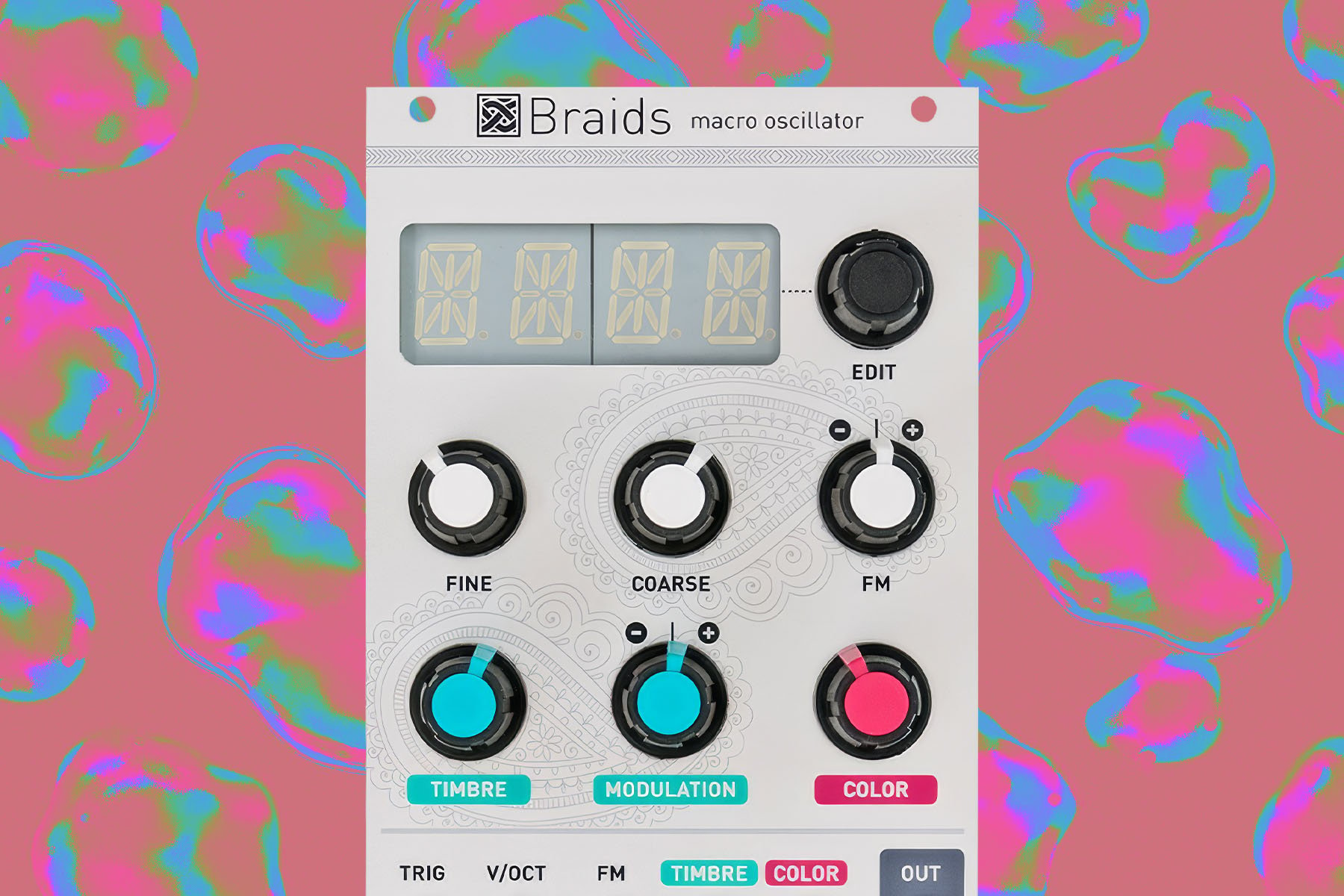

Analog, aka CV/Gate (control voltage/gate), is the simplest and oldest method for connecting things. Essentially, a single cable carries a single signal from point A to point B. Just as you would connect the output of your electric guitar to the input of an amplifier, with CV/Gate you can send a voltage from, for instance, a keyboard to the frequency control input of an oscillator. Its simplicity and reliability have allowed it to survive to this day: it now coexists happily with more advanced digital communication protocols, and it remains the main method of connection in analog modular synthesizers.
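To make the idea of a control voltage concrete, here is a minimal Python sketch of what a keyboard's CV and gate outputs might carry. It assumes the widespread 1 V/octave pitch convention (not every system uses it; some instruments use other scalings such as Hz/V), an arbitrary 0 V reference frequency, and a 5 V gate level. All of these values are illustrative assumptions, not a specification of any particular instrument.

```python
# A sketch of keyboard CV/Gate, assuming the 1 V/octave convention.
# REFERENCE_HZ and GATE_HIGH_VOLTS are illustrative assumptions: a real
# oscillator's 0 V pitch depends on its tuning, and gate levels vary by system.
REFERENCE_HZ = 261.63   # assumed oscillator frequency at 0 V (roughly middle C)
GATE_HIGH_VOLTS = 5.0   # assumed "on" level for the gate signal


def key_to_volts(semitones: int) -> float:
    """Pitch CV for a key, counted in semitones from the 0 V reference key.

    Under 1 V/octave, each semitone adds 1/12 V.
    """
    return semitones / 12.0


def volts_to_hz(volts: float, reference_hz: float = REFERENCE_HZ) -> float:
    """Oscillator frequency for a pitch CV: every added volt doubles the frequency."""
    return reference_hz * 2.0 ** volts


def gate_volts(key_held: bool) -> float:
    """The gate is binary: high while a key is held, 0 V otherwise."""
    return GATE_HIGH_VOLTS if key_held else 0.0


if __name__ == "__main__":
    for key in (0, 7, 12, -12):
        cv = key_to_volts(key)
        print(f"key {key:+3d}: CV {cv:+.3f} V -> {volts_to_hz(cv):.2f} Hz, gate {gate_volts(True)} V")
```

The point of the sketch is that a single cable carries a single number (a voltage), and the receiving oscillator is what gives that number its musical meaning.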

MIDI, aka Musical Instrument Digital Interface, is undoubtedly the most widely implemented method of sending control information between electronic instruments. Standardized in 1983, MIDI has become an expected feature on any electronic musical instrument, hardware or software. It works by transmitting and receiving specific messages accompanied by values in the range of 0 to 127, either over a single bidirectional cable or over a pair of unidirectional cables. As a result, a single controller can manipulate any number of parameters on one instrument or even across multiple instruments. MIDI is a great way to control your instruments, but it does have some disadvantages, most notably its low resolution (a typical value has only 128 steps), although that is planned to change with the arrival of MIDI 2.0, currently in development. In addition, an extended MIDI protocol known as MPE (MIDI Polyphonic Expression) is gaining momentum and is now employed in instruments and controllers such as Roger Linn's LinnStrument, Artiphon's Instrument 1, and Embodme's Erae Touch. Feel free to refer to our Brief History of MIDI, MIDI Connector Guide, and What is MPE? articles for more information.
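As a rough illustration of those 0 to 127 values, the following Python sketch assembles the raw bytes of a few MIDI 1.0 channel messages. The status bytes and data layouts for Note On, Note Off, Control Change, and Pitch Bend follow the MIDI 1.0 specification; the function names are our own, and the example notes and values are arbitrary. Pitch Bend is included because it is one of the few MIDI 1.0 messages that combines two data bytes into a 14-bit value, which hints at why 7-bit resolution is considered a limitation.

```python
def _check_range(name: str, value: int, maximum: int) -> None:
    """Raise if a field falls outside 0..maximum."""
    if not 0 <= value <= maximum:
        raise ValueError(f"{name} must be in 0..{maximum}, got {value}")


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Note On: status 0x90 + channel, then note and velocity (7 bits each).

    Channels are 0-15 on the wire (shown to users as 1-16).
    """
    _check_range("channel", channel, 15)
    _check_range("note", note, 127)
    _check_range("velocity", velocity, 127)
    return bytes([0x90 | channel, note, velocity])


def note_off(channel: int, note: int, velocity: int = 0) -> bytes:
    """Note Off: status 0x80 + channel, then note and release velocity."""
    _check_range("channel", channel, 15)
    _check_range("note", note, 127)
    _check_range("velocity", velocity, 127)
    return bytes([0x80 | channel, note, velocity])


def control_change(channel: int, controller: int, value: int) -> bytes:
    """Control Change: status 0xB0 + channel, then controller number and value."""
    _check_range("channel", channel, 15)
    _check_range("controller", controller, 127)
    _check_range("value", value, 127)
    return bytes([0xB0 | channel, controller, value])


def pitch_bend(channel: int, value: int) -> bytes:
    """Pitch Bend: a 14-bit value (0-16383, center 8192) sent LSB first, then MSB."""
    _check_range("channel", channel, 15)
    _check_range("value", value, 16383)
    return bytes([0xE0 | channel, value & 0x7F, (value >> 7) & 0x7F])


if __name__ == "__main__":
    print(note_on(0, 60, 100).hex(" "))        # middle C on channel 1
    print(control_change(0, 1, 64).hex(" "))   # CC 1 (mod wheel) at roughly half
    print(pitch_bend(0, 8192).hex(" "))        # pitch bend at rest
    print("7-bit steps:", 2 ** 7, "| 14-bit steps:", 2 ** 14)
```

Every message is just a status byte followed by one or two small numbers, which is part of why MIDI is so easy to implement and so widely supported.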

OSC, aka Open Sound Control, is a network-based communication protocol. It was introduced in 2002 as a means of sharing musical performance data between devices like synthesizers, drum machines, and computers. The main advantages of OSC over MIDI are higher data resolution and a much more flexible message structure, although, as mentioned earlier, MIDI remains far more widely used, perhaps because it arrived earlier and so many instruments already rely on it. Nevertheless, plenty of music software and hardware embraces OSC, such as Max/MSP, Reaktor, Pure Data, TouchOSC, Monome devices, Audiocubes, and more.

HID, aka Human Interface Device, was developed to foster innovation in human-computer interaction, and specifically to simplify the process of connecting various devices to computers, mostly via USB. It is not a protocol designed for musical instruments, but it has been widely used to adapt all sorts of devices, like web cameras, trackpads, sensors, joysticks, and other gaming controllers, for music-making. Keep in mind that most musical instruments aren't equipped to process HID data directly, so common practice is to parse the data on a computer and only then route it to the instrument.
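The "parse on a computer, then route" step can be sketched in Python. Real HID report layouts are device-specific and are described by each device's report descriptor, and reading them usually goes through a library such as hidapi. To keep the sketch self-contained, it assumes a hypothetical joystick whose 3-byte report holds a button bitmask followed by X and Y axes in the range 0 to 255, and it simply rescales those values to 7-bit MIDI CC values.

```python
from dataclasses import dataclass


@dataclass
class JoystickState:
    buttons: int  # bitmask; bit 0 is the first button
    x: int        # 0-255
    y: int        # 0-255


def parse_report(report: bytes) -> JoystickState:
    """Decode the hypothetical 3-byte report: [buttons, x, y]."""
    if len(report) != 3:
        raise ValueError(f"expected 3 bytes, got {len(report)}")
    return JoystickState(buttons=report[0], x=report[1], y=report[2])


def to_cc_values(state: JoystickState) -> dict:
    """Rescale 8-bit axes to 7-bit MIDI values and turn button 1 into an on/off value."""
    return {
        "x": state.x >> 1,   # 0-255 -> 0-127
        "y": state.y >> 1,
        "button_1": 127 if state.buttons & 0b1 else 0,
    }


if __name__ == "__main__":
    raw = bytes([0b01, 200, 37])  # example report, as if read from the device
    print(to_cc_values(parse_report(raw)))
```

From here, the resulting values could be packed into MIDI or OSC messages and forwarded to the instrument.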

It is also worth mentioning that controller interfaces are often built into a synthesizer's design, yet they can remain accessible for controlling other instruments via dedicated outputs, most prominently MIDI and CV/Gate.

Signal Types

Whether you decide to go all analog and use something like Make Noise Pressure Points to control your synthesizer, or use the Studio MIDI Guitar from Jamstik, there are primarily two types of signals you'll be working with: binary and continuous. By binary, I mean any signal that has just two states: off and on. Examples include analog gate and trigger signals, note on and note off messages in MIDI, switches, etc.

As you would expect, continuous control signals are those that have a range of values between their minimum and maximum points. In MIDI these are carried by CC (Control Change, often called continuous controller) messages, and in analog equipment by control voltage signals. They may be produced by sliders, knobs, surface pressure, XY pads, etc.

Of course, this is a rather reductive classification, and there are many more subtleties within each control method. Overall, though, mixing these two types of signals in various ways presents a near-infinite field of possibilities for interacting with electronic instruments.

Controller Types

To make sense of the abundance of controllers and understand which one(s) best fit particular applications, some sort of categorization seems worthwhile. Thinking about it, I came up with four distinct groups: imitative controllers, pad/grid controllers, alternative controllers, and experimental controllers. Let's have a closer look at each.

Imitative Controllers

This category includes any controller whose interface is inspired by acoustic and electroacoustic instruments or other gear. The obvious example is the overwhelmingly widespread keyboard controller. Keyboard controllers are convenient primarily because most people are at the very least familiar with the common arrangement of five black and seven white keys repeated across a few octaves, and data from a key press is much easier to extract than, say, from a vibrating string. However, a keyboard is not necessarily the best way to capture certain subtleties of performance like tremolo or vibrato (a limitation addressed by the almost universal addition of pitch bend and modulation wheels to keyboards, as well as the recent spread of MPE technology). Also, if you are trained on a different instrument, you may prefer to retain your technique and overall playing comfort when dealing with synthesizers. As such, over the years controllers have taken the shape of guitars (e.g. SynthAxe, Misa Kitara and Tri-Bass, Artiphon Instrument 1), drums/percussion (e.g. Nord Drum, Keith McMillen BopPad, Roland SPD-SX), and wind instruments (e.g. Akai EWI, Roland AE-10 Aerophone).

Another group of controllers in this category replicates the layouts and design of electronic music hardware, like mixers and console desks (e.g. Korg NanoKontrol2, Presonus FaderPort 8, Faderfox MX12), DJ gear (e.g. Native Instruments Traktor Kontrol S3, Numark DJ2GO2), and even guitar pedals and multi-effects processors (e.g. Keith McMillen Softstep 2, Tech 21 MIDI Mongoose).

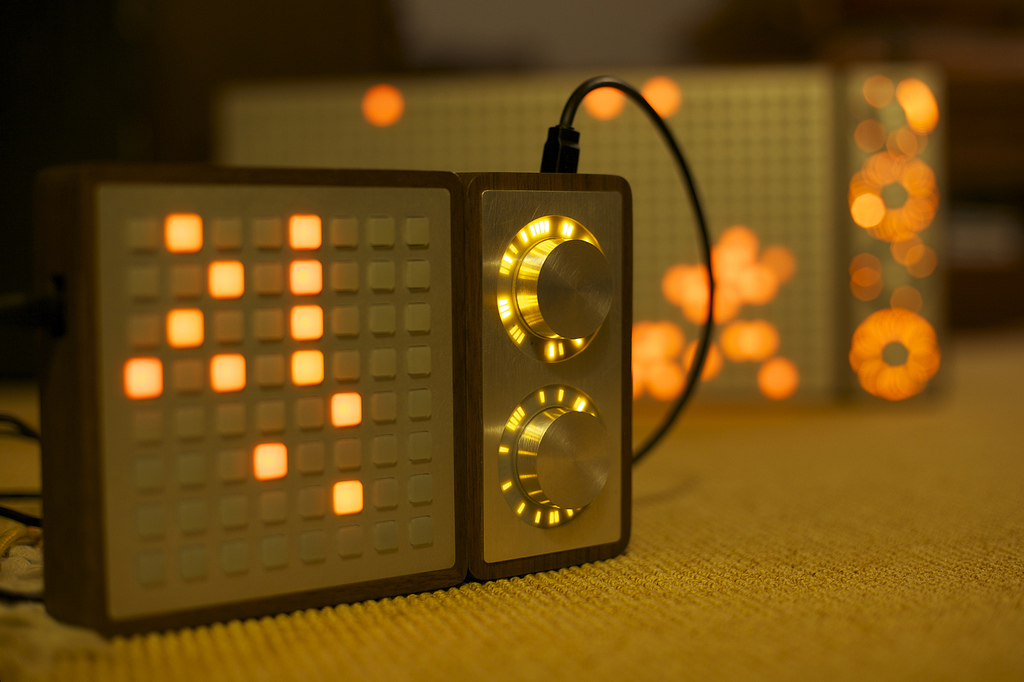

Monome Grid 64 and Arc

Pad/Grid Controllers

The emergence of pad controllers can be traced to Roger Linn's invention of the iconic Akai MPC sampling workstation. Its 4x4 layout of 16 square pads became a staple for pad-style MIDI controllers and is still widely used today. Not only did the MPC and MPC-style controllers become de facto instruments for beat-driven genres like hip-hop, but they also served as an inspiration for powerful software like Ableton Live, and later blossomed into the grid controllers spearheaded by Monome, the boutique company from Upstate New York.

By expanding the MPC's pad layout into a grid of 64, 128, 256, or 512 programmable buttons, Monome's Grid offered a controller much better suited for sequencing than the MPC. Furthermore, the company's open source philosophy opened up the platform to a community of developers, who created a variety of applications ranging from clever arpeggiators and complex sequencers to comprehensive DAW controllers, Ableton Live in particular, as Monome's grid layout fit Live's clip-based architecture like a glove. This later influenced the creation of more commercially available controllers like the Akai APC40, Novation Launchpad, and, of course, Ableton's own Push.
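Part of why a grid suits sequencing is that its layout maps directly onto a step sequencer's data: columns can stand for steps in time and rows for voices or pitches, with each button toggling a step and its light showing the result. The Python sketch below illustrates that idea for an 8x8 grid like the Grid 64; the class and method names are hypothetical, and it does not use Monome's actual software or protocol.

```python
class GridSequencer:
    """Columns are steps in time, rows are voices; each cell is a step on/off."""

    def __init__(self, columns: int = 8, rows: int = 8) -> None:
        self.columns = columns
        self.rows = rows
        self.cells = [[False] * columns for _ in range(rows)]
        self.playhead = 0

    def press(self, x: int, y: int) -> None:
        """Toggle the step at column x, row y (as a button press would)."""
        self.cells[y][x] = not self.cells[y][x]

    def tick(self) -> list:
        """Return the rows that fire on the current step, then advance (wrapping around)."""
        firing = [row for row in range(self.rows) if self.cells[row][self.playhead]]
        self.playhead = (self.playhead + 1) % self.columns
        return firing

    def render(self) -> str:
        """Text stand-in for the grid lights: '#' = step on, '.' = off."""
        return "\n".join("".join("#" if on else "." for on in row) for row in self.cells)


if __name__ == "__main__":
    seq = GridSequencer()
    for step in range(0, 8, 2):
        seq.press(step, 0)   # a pattern on every other step of row 0
    seq.press(4, 1)          # a single hit on row 1
    print(seq.render())
    print([seq.tick() for _ in range(8)])
```

The same button both edits the pattern and displays it, which is the kind of tight feedback loop a keyboard or a 16-pad layout doesn't offer.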

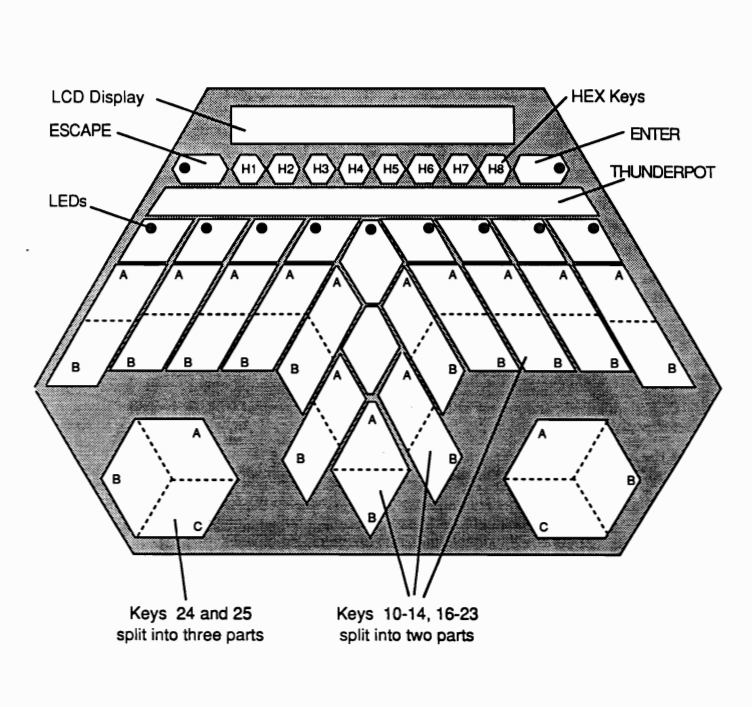

Buchla Thunder graphics from the original manual

Alternative Controllers

When we use the term "alternative," it always implies a comparison with something else. In many respects, Monome's Grid did present an alternative to keyboard controllers, and even to pad controllers inspired by the MPC; however, this format has gained such overwhelming popularity that it has carved out its own niche. So in this section we are going to talk about controllers that are unique in their own right.

Perhaps one of the most widely known and longest-used alternatives is the ribbon controller. It has existed since the dawn of electronic music (e.g. Ondes Martenot, Trautonium), and to this day it remains a great way to play a synthesizer. Ribbon controllers excel when you need to slide smoothly between notes or values, and they can also be used in tandem with a keyboard as a secondary controller. This has been a relatively common feature in synthesizer design, appearing on a variety of instruments from Moog's early modular systems to the Yamaha CS-80. These days, ribbon controllers are produced by Doepfer and Eowave, and they can still be found built into synthesizers such as the ASM Hydrasynth.

Touchless controllers are also gaining popularity, and like ribbon controllers, they have roots going all the way back to the early 20th century, namely to one of the first electronic musical instruments, the theremin. While the technology behind touchless controllers varies, the performance principle remains the same, and it is deceptively simple: the sound is controlled by hand movements in the air. In the classic theremin approach, the performer alters the pitch and volume of a tone by moving their hands near two antennas; by decoupling the control element from the instrument, however, hand gestures can be mapped to any parameter of the sound. Doepfer's A-178 module provides such an interface in the Eurorack format. Similar results can be achieved with now widely available proximity sensors, as in Koma Elektronik's Kommander. Some controllers take this idea to a whole different level, implementing sensors that recognize the subtleties of individual gestures like tilt, vibration, roll, etc.

Another historic controller type that has recently seen a revival is the capacitive touch controller. These controllers are often composed of a series of conductive plates in various arrangements. Each plate is usually tuned to a specific voltage, and is also capable of extracting a few other types of signals from touch: gate, pressure (the amount of skin touching the surface), and in some cases position (where the finger is on the plate). This approach dates back to Buchla's earliest modular instruments, and some great modern examples include the Verbos Electronics Minihorse, Make Noise 0-CTRL and Pressure Points, and Random*Source Serge TKB.

A particularly interesting controller in this realm is the Manta from Snyderphonics. Its 6x8 grid of 48 touch-sensitive plates can be freely programmed for a wide variety of applications. The plates are velocity and pressure sensitive, which allows a high degree of expressivity. Isomorphic by design (any given interval or chord has the same shape wherever it is played), the controller is especially well suited for performing microtonal music. Rather than using the common MIDI protocol, Manta transmits flexible HID data, which can be translated into MIDI or OSC messages via dedicated applications, or into CV/Gate with the help of the companion module Manta Mate.
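"Isomorphic" can be made concrete with a little arithmetic: if every pad's pitch is a fixed number of steps per column plus a fixed number per row, then any interval or chord keeps the same shape wherever it is played, and changing the number of divisions per octave retunes the whole surface for microtonal work. The Python sketch below uses a simplified rectangular layout with hypothetical interval values (2 steps per column, 5 per row) and a hypothetical base frequency; it illustrates the principle only and is not the Manta's actual hexagonal default mapping.

```python
def pad_to_step(column: int, row: int, column_interval: int = 2, row_interval: int = 5) -> int:
    """Scale step for a pad: the same offset per column and per row everywhere (isomorphism)."""
    return column * column_interval + row * row_interval


def step_to_hz(step: int, divisions_per_octave: int = 12, base_hz: float = 110.0) -> float:
    """Equal division of the octave: 12 gives standard tuning, other values give microtonal ones."""
    return base_hz * 2.0 ** (step / divisions_per_octave)


def chord_from(column: int, row: int, shape: list) -> list:
    """Steps for a chord 'shape' given as (column offset, row offset) pairs from a root pad."""
    return [pad_to_step(column + dc, row + dr) for dc, dr in shape]


if __name__ == "__main__":
    shape = [(0, 0), (2, 0), (1, 1)]  # an arbitrary three-pad shape
    for root in [(0, 0), (3, 2)]:
        steps = chord_from(*root, shape)
        intervals = [s - steps[0] for s in steps]
        print(root, steps, intervals)  # intervals are identical for both roots
    print([round(step_to_hz(s, 19), 2) for s in range(0, 20, 4)])  # 19 divisions per octave
```

Because the shape-to-interval relationship never changes, a fingering learned once works in every key and in every tuning the surface is set to.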

Capacitive sensing is also the basis of the multi-touch technology used in modern smartphones and tablets. Speaking of which, there are several applications that allow users to create fully customized controller layouts, the most popular being Liine Lemur (ported from the groundbreaking hardware controller created and distributed by the French company Jazzmutant in the early 2000s) and TouchOSC. This concept of convertibility has since been re-envisioned in a couple of hardware controllers, the Sensel Morph and the Joué Board, which let users dynamically swap silicone overlays on a multi-touch sensing surface. Regrettably, both the Morph and the Board have recently been discontinued.

It is also worth mentioning that both touch sensing and touchless technologies were widely explored by the synth pioneer Don Buchla, manifesting in such extraordinary controllers as Thunder, Lightning, and Marimba Lumina.

One more controller that belongs to this group, yet remains difficult to categorize, is the multidimensional Touché by Expressive E. While in some ways it is similar to a joystick with added pressure sensing, it stands out by offering a very natural, spring-like response. Similar technology is implemented key by key in the company's upcoming Osmosé, an MPE-enabled keyboard that produces independent X, Y, and Z touch data from every single key.

Alvin Lucier Performing Music For Solo Performer

Experimental Controllers

When the term "experimental" is attached to the description of anything, it always adds a flavor of mystery and enigma. So far we've explored a vast array of controllers for electronic musical instruments, which should cover the needs of most performers; however, there is always room to explore. The category of experimental controllers includes devices and approaches that employ novel forms of interaction between sound and the environment. I say environment because in some of these cases human presence is not even necessary: in this category we are primarily interested in mapping readings from all sorts of sensors to parameters of sound and music.

For example, we can use biosensors to translate changes in different parts of our bodies into sound. This is not a new concept, but it has been used only sparingly throughout electronic music history. Alvin Lucier's Music For Solo Performer, composed in 1965, maps brainwaves to sound via an electroencephalograph (EEG). Hearing our brain activity is certainly interesting, yet other parts of our bodies can be just as exciting to explore for musical purposes: heart rate, electrodermal and muscular activity, and bending joints are all excellent sources. Moreover, listening to the results of our own internal bodily processes opens up a field of exploration called biofeedback (pioneered by composers such as David Rosenboom): when we hear our brainwaves controlling a synthesizer, for instance, the brainwave patterns naturally change in response, creating a cascading set of patterns that shift as our attention drifts between different levels of focus.

The world around us can also provide an inexhaustible supply of control data: air humidity and temperature, the amount of light, the rotation of the planet, etc. Working with sensors often entails dealing with computers and microcontrollers, but a few devices are designed specifically for converting sensor data into musically usable signals. For example, Instruo's Scion module converts the small electrical signals occurring in flora and fauna into synth-friendly CV/gate. Koma's Field Kit is equipped with a sensor interface that can interpret and convert data from a wide variety of sensors. Similarly, Eowave's EO-311 can convert data from up to four different sensors into control voltage signals.

Mapping Strategies

Now that we've explored the breadth of controllers, it's time to touch upon the concept of mapping, which has already come up several times in this article. In general terms, mapping simply means assigning the values coming from a particular element of a controller (like a knob or a fader) to a desired parameter of your electronic musical instrument. This process can be very personal, and preferences vary from person to person depending on many factors, like the genre of music they perform, the software or hardware they use, or even the nuances of the technique they have developed. However, there are a few points worth mentioning that can benefit most people.

First of all, subtleties matter: it is not always necessary or desirable to map a controller element to the full range of a parameter. Attenuating and/or reversing controller output is common practice, and it is highly recommended.
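Attenuation and reversal both come down to rescaling. Here is a minimal Python sketch, assuming a 7-bit MIDI-style input (0 to 127) and a destination parameter normalized to 0.0 to 1.0: narrowing the output range attenuates the control, and swapping the output minimum and maximum reverses it. The function name and defaults are our own.

```python
def scale(value: float, in_min: float = 0.0, in_max: float = 127.0,
          out_min: float = 0.0, out_max: float = 1.0) -> float:
    """Map value from [in_min, in_max] to [out_min, out_max], clamping to the input range.

    Passing out_min > out_max reverses the direction of the control.
    """
    t = (value - in_min) / (in_max - in_min)
    t = min(max(t, 0.0), 1.0)  # clamp so out-of-range input can't overshoot the parameter
    return out_min + t * (out_max - out_min)


if __name__ == "__main__":
    knob = 96
    print("full range:", scale(knob))
    print("attenuated to 0.25-0.5:", scale(knob, out_min=0.25, out_max=0.5))
    print("reversed:", scale(knob, out_min=1.0, out_max=0.0))
```

Restricting a knob to a narrow window like 0.25 to 0.5 spreads its whole travel over the region where a parameter sounds most interesting, which effectively gives you finer control there.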

Secondly, a single control element may be mapped to multiple parameters. This is a great way to create complex sonic changes with a simple gesture. As mentioned above, scaling the output separately for each parameter is useful here.
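A one-knob "macro" of this kind can be sketched in Python as a list of per-parameter ranges applied to the same normalized input. The parameter names and ranges below are hypothetical examples; note that the last mapping runs from high to low, so turning the knob up opens the filter while drying out the reverb.

```python
from dataclasses import dataclass


@dataclass
class ParameterMapping:
    parameter: str
    out_min: float
    out_max: float

    def apply(self, normalized: float) -> float:
        """Scale a 0.0-1.0 control value into this parameter's own range."""
        return self.out_min + normalized * (self.out_max - self.out_min)


# Hypothetical destinations; the last range is reversed (high -> low).
MACRO_KNOB = [
    ParameterMapping("filter_cutoff_hz", 200.0, 8000.0),
    ParameterMapping("resonance", 0.0, 0.6),
    ParameterMapping("reverb_mix", 0.5, 0.1),
]


def fan_out(cc_value: int, mappings: list) -> dict:
    """Send one 7-bit control value to several parameters, each with its own scaling."""
    normalized = min(max(cc_value, 0), 127) / 127
    return {m.parameter: m.apply(normalized) for m in mappings}


if __name__ == "__main__":
    for cc in (0, 64, 127):
        print(cc, fan_out(cc, MACRO_KNOB))
```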

Processing control signals before they reach the destination parameter can also be very useful. For example, adding a long delay to a controller element or running its output through a probability function can yield exciting results that are unpredictable and fulfilling. Or you might abstract the original control signal altogether: instead of using it directly, find a way to assess its rate of change, detect when it goes above or below a threshold, or measure how long it has been active. The world of mapping strategies is deep and full of new territory for exploration, allowing us to create entirely new rules and conventions for interacting with our instruments.
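Each of the processing ideas above can be sketched as a small stateful function that is fed one control value per update. The Python below is an illustrative sketch rather than any product's implementation: the threshold detector uses two levels (hysteresis, our addition) so that a signal hovering near the threshold doesn't chatter on and off, the delay is counted in updates rather than seconds, and all class names and default values are hypothetical.

```python
import random
from collections import deque


class ThresholdDetector:
    """Continuous -> binary: turns on above on_level, off again only below off_level."""

    def __init__(self, on_level: float = 0.6, off_level: float = 0.4) -> None:
        self.on_level = on_level
        self.off_level = off_level
        self.is_on = False

    def process(self, x: float) -> bool:
        if self.is_on and x < self.off_level:
            self.is_on = False
        elif not self.is_on and x > self.on_level:
            self.is_on = True
        return self.is_on


class RateOfChange:
    """Approximate how fast the control is moving (units per second)."""

    def __init__(self) -> None:
        self.previous = None

    def process(self, x: float, dt: float) -> float:
        rate = 0.0 if self.previous is None else (x - self.previous) / dt
        self.previous = x
        return rate


class ActiveTimer:
    """Seconds a binary signal has been continuously on; resets to 0 when it turns off."""

    def __init__(self) -> None:
        self.elapsed = 0.0

    def process(self, is_active: bool, dt: float) -> float:
        self.elapsed = self.elapsed + dt if is_active else 0.0
        return self.elapsed


class DelayLine:
    """Output each value a fixed number of updates after it arrives."""

    def __init__(self, updates: int, initial: float = 0.0) -> None:
        self.buffer = deque([initial] * updates)

    def process(self, x: float) -> float:
        self.buffer.append(x)
        return self.buffer.popleft()


def probability_gate(trigger: bool, chance: float, rng=random.random) -> bool:
    """Let a trigger through with the given probability (0.0 to 1.0)."""
    return trigger and rng() < chance


if __name__ == "__main__":
    detector, slope, timer = ThresholdDetector(), RateOfChange(), ActiveTimer()
    delay = DelayLine(updates=3)
    dt = 0.01  # assumed 100 control updates per second
    for x in [0.1, 0.3, 0.65, 0.7, 0.5, 0.35, 0.2]:
        on = detector.process(x)
        print(f"x={x:.2f} on={on} rate={slope.process(x, dt):+.1f}/s "
              f"held={timer.process(on, dt):.2f}s delayed={delay.process(x):.2f} "
              f"chance_hit={probability_gate(on, 0.5)}")
```

These blocks can be chained in any order, for example a threshold detector feeding a probability gate, which is where much of the creative potential of mapping lies.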

As you can see, there are myriad ways to interact with electronic musical instruments. This article certainly doesn't exhaust the topic of music controllers, but hopefully it at the very least covers the breadth of the subject and gives you a glimpse into the immense possibilities offered by decoupling an instrument's user interface from its sound production method. Perhaps we will take a deeper look into individual controllers in future articles.