Over the past several years, "MPE" has become something of a buzzword in the world of electronic music performance and production...but what is MPE? In short, MPE (MIDI Polyphonic Expression, or Multidimensional Polyphonic Expression) is an extension of the MIDI protocol that expands its expressive range, giving players and producers a degree of per-note control that is difficult to achieve with standard MIDI implementations alone.

MPE has taken some time to catch on, but since its adoption into the official MIDI specification, the last several years have seen a boom in the production of MPE-compatible controllers, synthesizers, and software. Today, MPE is a standard feature of most industry-standard DAWs and plugins, and even of hardware synthesizers such as ASM's Hydrasynth, Modal's Cobalt8 and Argon8, Black Corporation's synthesizers, and others. And with the wide availability of MPE controllers (Sensel's Morph, Roger Linn's LinnStrument, Keith McMillen's QuNeo, QuNexus, and K-Board Pro, just to name a few), now is an excellent time to start figuring out how MPE can fit into your personal music-making practice.

So now that we know in broad strokes what MPE is, we can start tackling bigger questions: where did it come from? How does it work? What is it good for? And to answer all of these questions, we should first take a look at where things started: with the development of MIDI altogether.

Where Did MIDI Come From?

MIDI was formalized in 1983. Just a few years prior, digital technologies had begun to rapidly find their way into electronic musical instruments, and simultaneously, computers were gradually becoming part of the world of recording and music-making. It had become abundantly clear that, for the music industry to continue to evolve in a positive direction, instrument makers needed to band together to define a universal communication protocol: an instrument-specific language of sorts that would allow different manufacturers' equipment to communicate seamlessly with one another, and that would allow for easy connection between musical instruments and computers. After much back-and-forth between a large committee of manufacturers, Roland and Sequential Circuits spearheaded the development of MIDI, and surprisingly, over the last 40 years it has remained fundamentally the same.

We should take a moment to note how incredible it is that the majority of the big players in the music industry actually came together to agree on a standard of production—this was a huge deal. Moreover, it's incredible that it has persisted for so long: the very first MIDI-capable devices are still very much compatible with other instruments produced today.

All that aside, MIDI wasn't a solution to every musical problem: in fact, it created many problems of its own. Many of these were easy to overlook at first given all of the new possibilities it allowed, but as time has gone on, some of its deficiencies have become more apparent. We detailed some of MIDI's pitfalls and early attempts to "correct" them in our Brief History of MIDI article, but today we're going to home in on MPE, and the problems it seeks to solve.

A Quick MIDI Refresher

To understand what MPE is for, we need first to understand a little bit about how more old-school MIDI implementations typically work. If you've used a MIDI-equipped synthesizer, controller, or music production software before, a lot of this should be familiar to you—but perhaps we can shine some light on a couple of shortcomings that you may or may not have considered before.

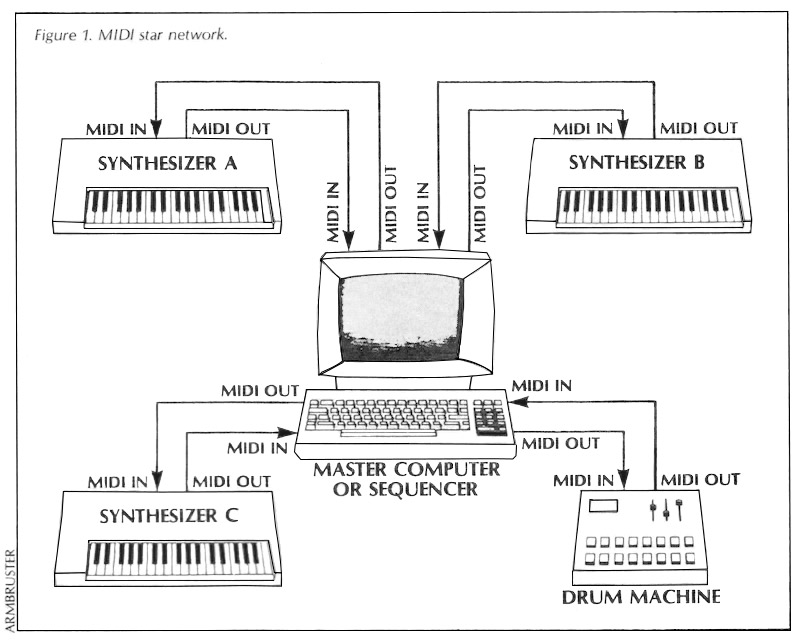

MIDI as a protocol is designed in such a way that it can act as a network of many interconnected devices. This was an important consideration from its very inception: after all, manufacturers wanted to ensure that it would be practical for musicians to have entire studios full of MIDI-compatible instruments. Because of that, MIDI is a multi-channel protocol: it can be used to send and receive data to/from distinct devices while maintaining a level of independence between these devices. Let's consider a fully MIDI-equipped studio to explore this idea.

A MIDI network diagram, from July 1983 Keyboard Magazine article by Bob Moog

The core of your MIDI-capable studio is most likely a computer or sequencer. This computer or sequencer contains a MIDI port: a point of arrival/departure for incoming/outgoing MIDI data. A MIDI port (be it in a computer, sequencer, etc.) itself comprises sixteen distinct MIDI channels: independent lanes of communication that allow us to send data between the computer/sequencer and individual connected instruments/devices. On each device, you can specify the channel (1–16) on which that device sends/receives MIDI data, and by sending data on specific channels from your computer/sequencer, you can independently address each of your individual instruments.

So that's simple enough—but what's all this talk about MIDI data? What information can you communicate via MIDI? Well, the most common data are MIDI notes: simple messages that indicate the beginning, ending, and even the velocity (speed of key strike) of individual notes—the type of data we usually see in a piano roll on a DAW. Velocity and pitch are established per event: each new note has distinct pitch and velocity independent from those before and after it.
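To make the idea of a note message concrete, here is a minimal sketch of how Note On and Note Off messages are laid out as raw bytes in MIDI 1.0: a status byte that carries the message type and the channel, followed by the note number and the velocity. The function names and the `_check` helper are our own, chosen for illustration; they don't come from any particular library.

```python
def _check(channel: int, *data: int) -> None:
    """Validate a 1-16 channel number and 7-bit data bytes (illustrative helper)."""
    if not 1 <= channel <= 16:
        raise ValueError("MIDI channels are numbered 1-16")
    if any(not 0 <= d <= 127 for d in data):
        raise ValueError("MIDI data bytes must be in the range 0-127")


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Note On: status 0x90 plus the zero-based channel, then note and velocity."""
    _check(channel, note, velocity)
    return bytes([0x90 | (channel - 1), note, velocity])


def note_off(channel: int, note: int, velocity: int = 0) -> bytes:
    """Note Off: status 0x80 plus the zero-based channel, then note and release velocity."""
    _check(channel, note, velocity)
    return bytes([0x80 | (channel - 1), note, velocity])


# Middle C (note 60) struck fairly hard on channel 1, then released.
middle_c_on = note_on(channel=1, note=60, velocity=100)
middle_c_off = note_off(channel=1, note=60)
```

Notice that the channel lives inside the status byte itself, which is how a single cable can carry independent streams for up to sixteen instruments.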

There are other types of data we can send too, though: CCs (control change, or continuous control messages). Every MIDI channel in a port has 128 CCs. You can think of CCs as individual lanes for control of specific parameters. These parameters can range from internal system settings to specific aspects of a synthesizer's internal architecture: for instance, you might be able to use a CC to define a filter's cutoff frequency, or a delay rate, etc. In the world of MIDI controllers, CCs are usually sent from hardware control sources like knobs, faders, joysticks, or buttons, and they can be related to most any parameter in most synths, DAWs, etc.
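As a rough illustration, a CC message has the same three-byte shape as a note message: a status byte carrying the channel, a controller number (0–127), and a value (0–127). The sketch below assumes a knob position normalized to 0.0–1.0, and uses controller 74 purely as an example; which parameter a given CC number controls is ultimately up to the receiving instrument. The function names are our own.

```python
def knob_to_cc_value(position: float) -> int:
    """Scale a normalized knob position (0.0-1.0) to a 7-bit CC value (0-127)."""
    position = max(0.0, min(1.0, position))
    return int(position * 127 + 0.5)


def control_change(channel: int, controller: int, value: int) -> bytes:
    """Control Change: status 0xB0 plus the zero-based channel, then controller and value."""
    if not 1 <= channel <= 16:
        raise ValueError("MIDI channels are numbered 1-16")
    if not (0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("controller and value must be in the range 0-127")
    return bytes([0xB0 | (channel - 1), controller, value])


# A knob turned all the way up, sent as controller 74 on channel 1.
# The choice of controller 74 is only an example for this sketch.
message = control_change(channel=1, controller=74, value=knob_to_cc_value(1.0))
```

Note that nothing in the message identifies a specific note: the only address it carries is the channel.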

So What's Wrong with MIDI?

While there are some conventions for the use/implementation of CCs as per-parameter controls, their musical function largely varies instrument-by-instrument, with the manufacturer deciding how best to use them in their specific devices. The catch is that CCs act at the level of the channel as a whole: CCs are not per-note messages, and for the most part, changing a CC when multiple notes are sounding will affect each sounding note equally. You've probably had this experience with pitch bend, for instance. Pitch bend is technically its own message type rather than a CC, but it behaves the same way: it is typically reserved for relating the movement of a wheel or joystick to a synthesizer's global pitch. On most polyphonic synthesizers, if you move the pitch wheel while playing a chord, all notes will bend equally...because, like CCs, pitch bend governs the behavior of an entire channel, above the scope of individual notes.
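Here is a small sketch of why a chord bends as a unit. A pitch bend message carries a channel and a 14-bit value (8192 at rest), with no note number at all, so a receiver has no way to apply it to just one voice. The `bent_pitches` function and the two-semitone bend range are assumptions made for illustration; real instruments let you configure the range.

```python
def pitch_bend(channel: int, amount: float) -> bytes:
    """Pitch Bend: status 0xE0 plus channel, then a 14-bit value as LSB, MSB.

    `amount` is a normalized wheel position from -1.0 to 1.0 (0.0 is centre).
    """
    amount = max(-1.0, min(1.0, amount))
    scale = 8191 if amount >= 0 else 8192
    value = 8192 + round(amount * scale)  # 0..16383, centre at 8192
    return bytes([0xE0 | (channel - 1), value & 0x7F, value >> 7])


def bent_pitches(held_notes: list[int], amount: float, bend_range: float = 2.0) -> list[float]:
    """Model a receiver: one bend value per channel, applied to every held note.

    bend_range (in semitones) is a hypothetical setting for this sketch.
    """
    return [note + amount * bend_range for note in held_notes]


chord = [60, 64, 67]                      # C major triad on one channel
wheel_up = pitch_bend(channel=1, amount=1.0)
result = bent_pitches(chord, amount=1.0)  # every note moves by the same amount
```

With the wheel fully up, all three notes of the chord rise by two semitones together; there is no way to express "bend only the top note" within a single channel.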

We can only assume that this basic truth about CCs is due to a combination of ease of implementation and the fact that the original polyphonic synths took direct design inspiration from the most successful monophonic synthesizers that preceded them. Look, for instance, at the similarities between the layout/user interface of the Moog Minimoog Model D and the Sequential Circuits Prophet-5: despite the fact that the Prophet-5 is polyphonic, it basically copies the layout and UI of the monophonic Minimoog...sidestepping a huge number of questions that likely emerged in the process of its design. One knob per parameter is quite natural on a synthesizer that can only play one note at a time—but hypothetically, if your instrument has multiple internal voices, it's very possible that each voice could have a different wave shape, different filter settings, different envelope shapes, etc. (see the Oberheim Four Voice for an example of an instrument that still maintained independent timbre settings for each individual voice).

Instead of maintaining this level of independence between voices when designing the Prophet-5, though, Dave Smith took a simpler, more affordable, and in some ways much more savvy approach by making it such that the panel's knob and switch settings applied to each voice equally. So when it came time to formalize the MIDI protocol (only a few years after the P-5's introduction), Dave Smith and co-conspirators seemed to take a similar philosophical approach: we can think of CCs as being like the Prophet-5's knobs...adjusting a CC that relates to a filter's cutoff frequency will adjust the cutoff for all voices. Of course, we aren't downplaying MIDI's significance here—at the time, it was much more important to get a working specification together, and solving philosophical questions about how instruments should work was a secondary concern to the more pragmatic issue of simply building something functional and relatively future-proof.

This truth, though, still has had a profound impact on the capabilities of electronic musical instruments: in these early days, sidestepping the question of how best to design a polyphonic instrument and a desire to conform with MIDI (the then-new de facto standard in instrument intercommunication) meant that this question wasn't re-approached in the mainstream for many years.

For a while, this was okay: many instruments did have internal means of enacting finer per-note control and simply used MIDI as a translation method for incoming and outgoing data. But along with the advent of rackmount synthesizers and software synthesizers, it became more and more common for musicians to primarily play their synthesizers/computers via external controllers, and at that point, you are completely limited to MIDI's own control structure. And while you can go a long way with velocity and CCs, this form of control lacks the per-note individual expressivity possible with, say, an acoustic instrument. On a guitar, for instance, each string can be bent individually, and each note can have vastly different timbre/articulation based on how it is picked. Moreover, the acoustic guitar doesn't rely on knobs or sliders for global timbre controls: all of the control comes from the player's hands. Many musicians and engineers sought to find a way to bring this level of expressive control to electronic musical instruments, often by proposing their own alternative instrument communication protocols (like McMillen/Wright/Wessel's ZIPI, Buchla's WIMP, etc.)...but eventually, clever minds began to wonder if MIDI actually already provided the tools one would need to implement per-note expression. In time, they realized that it did.

How Does MPE Work?

Before MPE, there were brave instrument designers that worked to build new electronic instruments from the ground up, without regard to prior building conventions or standard communication protocols—we can look to Lippold Haken and John Lambert, for instance, whose Haken Continuum and Eigenharp (respectively) focused instead on unique internal control protocols. Their instruments were designed expressly for the purpose of offering musicians a refined level of per-note expressive control, allowing independent pitch bend and timbral control for each individual tone. In the Continuum, for instance, a three-dimensional touch surface allows musicians to use X, Y, and Z touch axes (as well as velocity, etc.) to address a sophisticated internal sound engine, allowing for a highly expressive experience without even needing to use MIDI (though of course, MIDI operation is possible on the Continuum!).

A gut suspicion about MIDI's shortcomings along with experience playing instruments like Eigenharp led instrument designers and software engineers (including Geert Bevin, who would eventually go on to develop the LinnStrument's firmware) to imagine a new alternative to controller design. Really, the answer had been right there all along: what if you could build a controller where each new keypress was on a new MIDI channel? What if you could build software instruments or hardware synths that could then address their internal sound engines by listening to multiple MIDI channels at once?

That is precisely how MPE works. First commercially presented as part of Roger Linn's LinnStrument and the Roli Seaboard in 2014–2015, this control scheme makes it such that each keypress can send completely independent pitch bend and CC data in addition to standard note on/off and velocity messages. By using a separate MIDI channel for each subsequent note, the pitch bend/CC values associated with one note do not affect the next—instead, new notes are allocated to the next free MIDI channel, maintaining full independence between voices.
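The channel-per-note idea can be sketched in a few lines. The class below hands each new note the next free channel and returns the channel to the back of the queue on release, so a recently used channel is reused as late as possible. It assumes the common arrangement in which channel 1 is kept for global messages and channels 2–16 carry individual notes; the class name, its methods, and the choice to raise an error when channels run out are all simplifications of our own, not a description of any particular controller's firmware.

```python
class MpeChannelAllocator:
    """Assign each sounding note its own MIDI channel (illustrative sketch)."""

    def __init__(self, note_channels=range(2, 17)):
        # Assumption: channel 1 is reserved for global messages,
        # leaving channels 2-16 for per-note data.
        self.free = list(note_channels)
        self.active = {}  # note number -> channel currently carrying it

    def note_on(self, note: int) -> int:
        if not self.free:
            # Real devices typically share or steal channels here;
            # this sketch simply refuses.
            raise RuntimeError("no free channel for a new note")
        channel = self.free.pop(0)
        self.active[note] = channel
        return channel

    def note_off(self, note: int) -> int:
        channel = self.active.pop(note)
        self.free.append(channel)  # reuse this channel last
        return channel


allocator = MpeChannelAllocator()
c_channel = allocator.note_on(60)   # first note
e_channel = allocator.note_on(64)   # second note gets a different channel
```

Because the two notes live on different channels, a pitch bend or CC sent on `c_channel` leaves the note on `e_channel` untouched, which is the whole trick.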

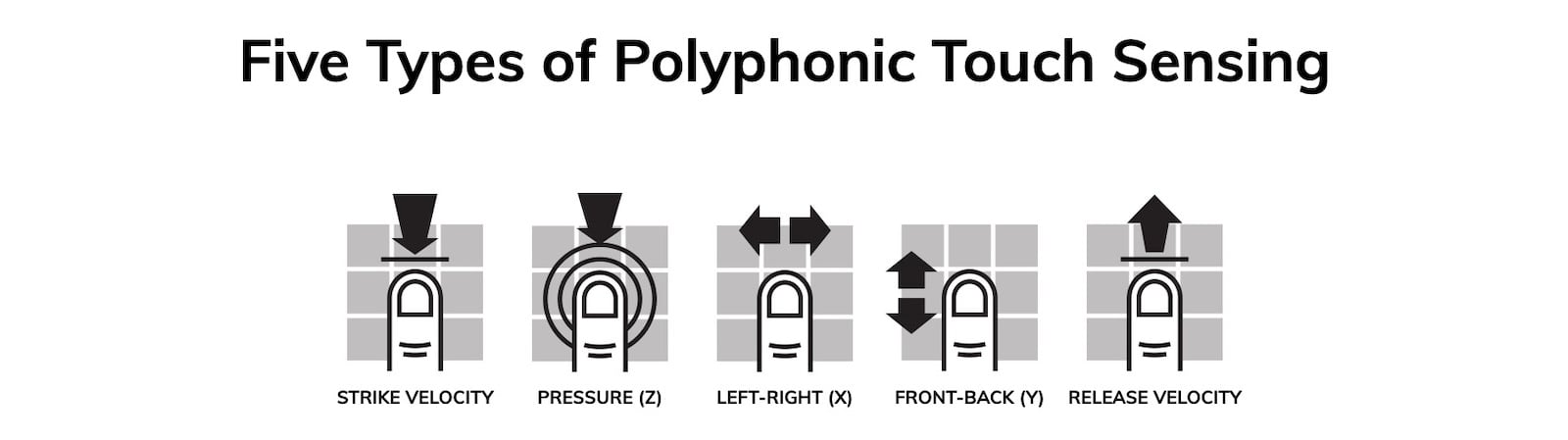

It's worth noting that the playing experience on most MPE controllers is intentionally quite different from other approaches to synthesizer control: as we've said, most synths rely solely on knobs/sliders/buttons/joysticks/wheels for control of pitch bend and timbre, with the playing surface (usually a keyboard, pad controller, or similar) dedicated to producing note events. MPE controllers, though, intentionally couple timbral control to the same playing surface that tells notes when to begin and end, enabling a playing experience much more like our acoustic guitar example above. To that end, most MPE controllers use some manner of non-mechanical "key" with sensors that can detect five primary playing "dimensions": note onset velocity, note release velocity, and your finger's position on the key in X (horizontal), Y (vertical), and Z (pressure) dimensions—and as such, each of these streams of data can be used to define some aspect (or several aspects) of the sound's timbre. Most often, the X position is mapped to pitch bend, allowing for intuitive vibrato (or glide effects)—but each of these control streams can be mapped to any available parameter.
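As a sketch of how the three continuous dimensions might travel over MIDI, the function below turns one finger's X, Y, and Z positions into three messages on that note's channel. It follows the mapping used by the MPE specification as we understand it (X as pitch bend, Y as CC 74, Z as channel pressure), but the function name and the normalized input ranges are assumptions for illustration; strike and release velocity ride along in the note on/off messages and aren't shown here.

```python
def _clamp(value: float, low: float, high: float) -> float:
    return max(low, min(high, value))


def touch_to_messages(channel: int, x: float, y: float, z: float) -> list[bytes]:
    """Convert one touch into per-note MIDI messages on its own channel.

    x: horizontal offset from the note's centre, -1.0 to 1.0
    y: vertical position on the key, 0.0 to 1.0
    z: pressure, 0.0 to 1.0
    """
    status = channel - 1
    bend = 8192 + round(_clamp(x, -1.0, 1.0) * 8191)  # 14-bit, centre 8192
    timbre = round(_clamp(y, 0.0, 1.0) * 127)
    pressure = round(_clamp(z, 0.0, 1.0) * 127)
    return [
        bytes([0xE0 | status, bend & 0x7F, bend >> 7]),  # X: pitch bend
        bytes([0xB0 | status, 74, timbre]),              # Y: CC 74
        bytes([0xD0 | status, pressure]),                # Z: channel pressure
    ]


# A finger centred on its key, at the bottom edge, pressing as hard as possible.
messages = touch_to_messages(channel=2, x=0.0, y=0.0, z=1.0)
```

All three are ordinary channel-wide messages; they only become per-note because each note has a channel to itself.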

For the first few years it existed, though, MPE faced a unique challenge: for it to truly work, there needed to be controllers, software, and hardware instruments that could utilize it. Controllers were the first commercial devices available to employ MPE (after all, the very nature of this new control scheme was entirely about extracting expressive control data from performers), but luckily, it was only a short time before software and hardware designers caught on and built MPE capability into their own devices. Because of the ease of implementation, software came first...but in the present day, MPE compatibility is a common feature of new hardware synthesizers. With that in mind, let's take a closer look at some of our favorite MPE controllers and MPE-compatible instruments to give you a sense of where you can look for interesting control sources and what you can do with them.

MPE Controllers We Love

Roger Linn with a prototype LinnStrument (image via Cool Hunting)

A discussion of MPE wouldn't be complete without credit to Roger Linn, a veteran figure in the world of electronic instrument design. Famous for his significant contributions in the world of drum machines and sampling with the LinnDrum and Akai MPC series, he's no stranger to making significant breakthroughs in music tech—and his LinnStrument follows suit. LinnStrument is a grid-based controller that takes full advantage of the now standard types of polyphonic touch sensing that MPE provides: strike velocity, pressure (Z axis), left-right movement (X axis), front-back movement (Y axis), and release velocity. Roger Linn holds a patent on the sensor technology used, and despite having no moving parts, it feels immediately responsive and expressive to play. It's worth noting here a very special touch—X axis movement when mapped to pitch bend (the typical behavior) actually bends the pitch proportionally to your finger position, allowing you to create seamless portamento pitch glides while maintaining a clear, accurate pitch reference, and without the need for a separate playing surface for "keyed" playing and continuous pitch glides (as with many other MPE controllers).

LinnStrument comes in a couple of varieties: the original LinnStrument, with 200 individual touch pads, and the LinnStrument 128, a smaller offering with (you guessed it) 128 individual pads. Each pad is backlit with RGB LEDs, which is a significant feature given its overall flexibility and programmability: it offers an internal sequencer, arpeggiator, split capability, row/column CC fader capability, low row CC/bend/etc. functionality, and much more. Even if you're not using an MPE-capable instrument or software, LinnStrument has a ton to offer to basically any musician.

Given its grid-style layout, playing the LinnStrument might take a bit of getting used to for keyboardists, but its default layout should be quite familiar to guitarists and bassists, with each row of pads ascending in tuning by a perfect fourth (in fact, you can even add a strap to play the LinnStrument in keytar/Chapman Stick-like positioning). Of course, it can be tuned in a large number of ways, including modes with no overlap between rows, octave offset between rows, and much more. All settings can be accessed from the LinnStrument's control surface itself with the aid of a front-panel printed menu system and several dedicated function keys, so despite its complexity, it's actually quite easy to navigate. Also worth noting: LinnStrument has both DIN input/output and MIDI via USB, making it easy to integrate with basically any setup you like.
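For a feel of how a grid tuned in fourths lays out its notes, here is a small sketch. Each pad to the right is one semitone higher, and each row up is a fixed interval higher: 5 semitones for a perfect fourth, 12 for an octave, or the number of columns for a layout with no overlap between rows. The function name and the `lowest_note` value are hypothetical and chosen only for illustration; they are not taken from the LinnStrument's actual defaults.

```python
def pad_note(row: int, column: int, lowest_note: int = 30, row_interval: int = 5) -> int:
    """MIDI note number for a pad on a grid, counting rows and columns from 0.

    lowest_note is a hypothetical value for the bottom-left pad.
    row_interval is the number of semitones between adjacent rows.
    """
    return lowest_note + row * row_interval + column


# In fourths tuning, moving up one row lands on the same note as
# moving five pads to the right, just like adjacent guitar strings.
same_note = pad_note(row=1, column=0) == pad_note(row=0, column=5)

# With a 16-column grid and no overlap, each row starts where the last ended.
next_row_start = pad_note(row=1, column=0, row_interval=16)
```

That overlap between rows is what gives guitarists their familiar chord and scale shapes anywhere on the surface.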

Another favorite MPE controller among Perfect Circuit staff is the now-discontinued Sensel Morph. The Morph takes user customization to an entirely different level: by itself, it is a bare touch surface with no discernible user interface aside from a blank, black touchpad roughly the size of a tablet. And while it actually can be used in this state, the Morph's true genius lies in its use of swappable overlays.

The Morph's overlays are thin pieces of molded silicone that attach to the Morph touch surface magnetically. The Morph can immediately recognize specifically which overlay is connected to it, as well as the user's preferences for how that specific overlay should behave (achieved through use of a desktop editor application, which we'll come back to in a moment). When in production, their range of overlays included a piano-like layout, "music production" layout with pads, sliders, and knobs, a Buchla Thunder-inspired layout, a drum pad layout (yes, it can withstand the force of drumsticks), and even overlays for gaming, video editing, and more.

The trend toward hyper-expressive compact controllers definitely didn't begin with the Morph, though. In fact, some of the earliest MIDI controllers that I personally became enamored with as a growing musician were those from Keith McMillen Instruments, the present-day company of Zeta/G-WIZ progenitor Keith McMillen. McMillen has a long history of work in the field of controller design, even having worked with Buchla on the original Thunder...and his sensibilities eventually led to the release of the hyperflexible QuNeo pad controller in 2011. QuNeo was way ahead of its time: each of its pads can act as a multi-touch control surface, producing MIDI notes, velocity, and CCs that correspond to the X, Y, and Z touch axes. Pads can even be divided into four distinct note regions, effectively turning its 16-pad touch surface into something more like a 64-pad surface. A handful of other assignable touch controls enable slider, button, and knob-like behaviors, making it, at the time, one of the most powerful and user-customizable all-in-one MIDI controllers around. The QuNeo was soon followed by the QuNexus, a portable keyboard controller with similar multi-touch expressive potential.

And frankly, the QuNeo and QuNexus have only grown more flexible over time. In 2021, Keith McMillen introduced new versions of these instruments—the QuNeo Red and QuNexus Red—which offer MPE support, following the precedent established by the K-Board Pro (first announced just a couple of years ago). These controllers take a somewhat more traditional approach in terms of layout than the LinnStrument or Morph, and they should prove to be immediately accessible for finger drummers and keyboardists alike, bringing the MPE touch to an even wider audience.

Of course, this isn't an exhaustive list—there are plenty of other MPE controllers out there, like the Embodme Erae Touch, Jamstik Studio MIDI Guitar (yes, it's an electric guitar and a MIDI/MPE controller!), Artiphon Instrument 1 (with its own take on a guitar-inspired interface), and others. No matter what your preferred playing interface, there's likely an MPE controller that will make sense for your own musical purposes—check out our full selection of expressive controllers for a taste of what's out there!

MPE-Capable Hardware Synthesizers

Of course, while many DAWs now support MPE (including Ableton Live and Apple's Logic), it's fair to say that we at Perfect Circuit are fond of the experience of playing physical instruments. And while it took a few years for MPE to really find a foothold in the world of hardware synths, once MPE was officially adopted into the MIDI specification, the floodgates opened with all sorts of creative explorations into the potential of MPE-enabled instruments.

The Hydrasynth Desktop and Keyboard

Certainly one of our favorite such instruments in recent years is ASM's Hydrasynth. While the Hydrasynth's sound engine was developed to leverage its own built-in polyphonic aftertouch-capable keyboard, eventual firmware updates made it possible to use external MPE controllers for even more extended control. Now available in four distinct variations (Keyboard, Desktop, Explorer, and Deluxe), the Hydrasynth is a perfect candidate for MPE control: it has a remarkably deep synthesis engine with a boatload of continuously variable parameters that affect the timbre...and the vast majority of these parameters can be addressed via the (extensive) internal modulation matrix. This means that it's actually quite simple to directly relate the five streams of MPE data to most any parameter of the sound engine, bringing instant color and life to even the simplest patches. Try using the MPE Y axis to control Wavescan and key pressure to control filter cutoff and you'll instantly have a profoundly expressive sound. And of course, since the Hydrasynth's mod matrix allows you to map any control source to many different destinations at once, it's possible to use a single MPE dimension to control many aspects of the instrument's sound, allowing you to make considerable alterations to the instrument's timbre note-by-note simply by altering your physical playing style...no knobs, benders, or ribbons required. Pair the Hydrasynth Desktop with any MPE controller and you have a remarkably expressive instrument with a huge sonic range.

Black Corporation's synthesizers are another great place to look for MPE-oriented synthesis: each of their instruments offers a unique take on a specific vintage synthesizer, complete with extended expressive control. Deckard's Dream is a modern take on the Yamaha CS-80, while Kijimi models itself after the RSF Polykobol, and the Xerxes after the Elka Synthex. While the MPE routing options aren't as extensive on these instruments, they each still provide a profoundly satisfying playing experience and are quite easy to set up for MPE control: for each, front-panel pre-assigned controls allow control for depth of the MPE assignment to specific parameters, allowing for everything from subtle inflections to touch-controlled dive bombs and deep modulation.

Of course, many other synths offer MPE support now as well: Sequential's Prophet-6 and OB-6, Modal's Argon8 and Cobalt8, Modor's NF-1...the list is constantly growing, and MPE is gradually becoming a fairly standard feature of new instruments. While none of the instruments we've discussed were designed with a built-in MPE playing interface (instead requiring an external controller for MPE exploration), developments on the horizon show promise that fully integrated MPE-capable devices may become more common. The forthcoming Expressive E Osmose, for instance, features a mechanical keybed with full MPE capability, using the same internal sound engine as the presently available Haken Continuum—and given how spectacular the Continuum playing experience is, we're excited to see how it translates with Expressive E's ingenious design.

New Dimensions

MPE is still in its early years, but happily, there's already plenty of territory to explore. Try picking up a Morph and controlling Ableton Live 11's Wavetable synth, or find yourself a Hydrasynth and LinnStrument; heck, get a QuNexus Red and a copy of Madrona Labs Aalto. You'll find that the experience of playing electronic sounds feels more intimate and personal than you could've possibly expected. It allows you to get away from the world of turning knobs and pressing buttons for controlling timbre, instead coupling various aspects of a sound's unfolding to a single action from the user, resulting in an uncanny experience where your touch almost magically seems to translate into changes in sound.

If you're the type of musician who is looking for deeper hands-on control of your sounds, or a sound designer looking for new opportunities for intricate sonic sculpting, take some time to try things out. MPE provides the raw ingredients for an expedient, expressive, and intuitive approach to sound manipulation, and happily, several years into its development, it seems clear that it is here to stay.