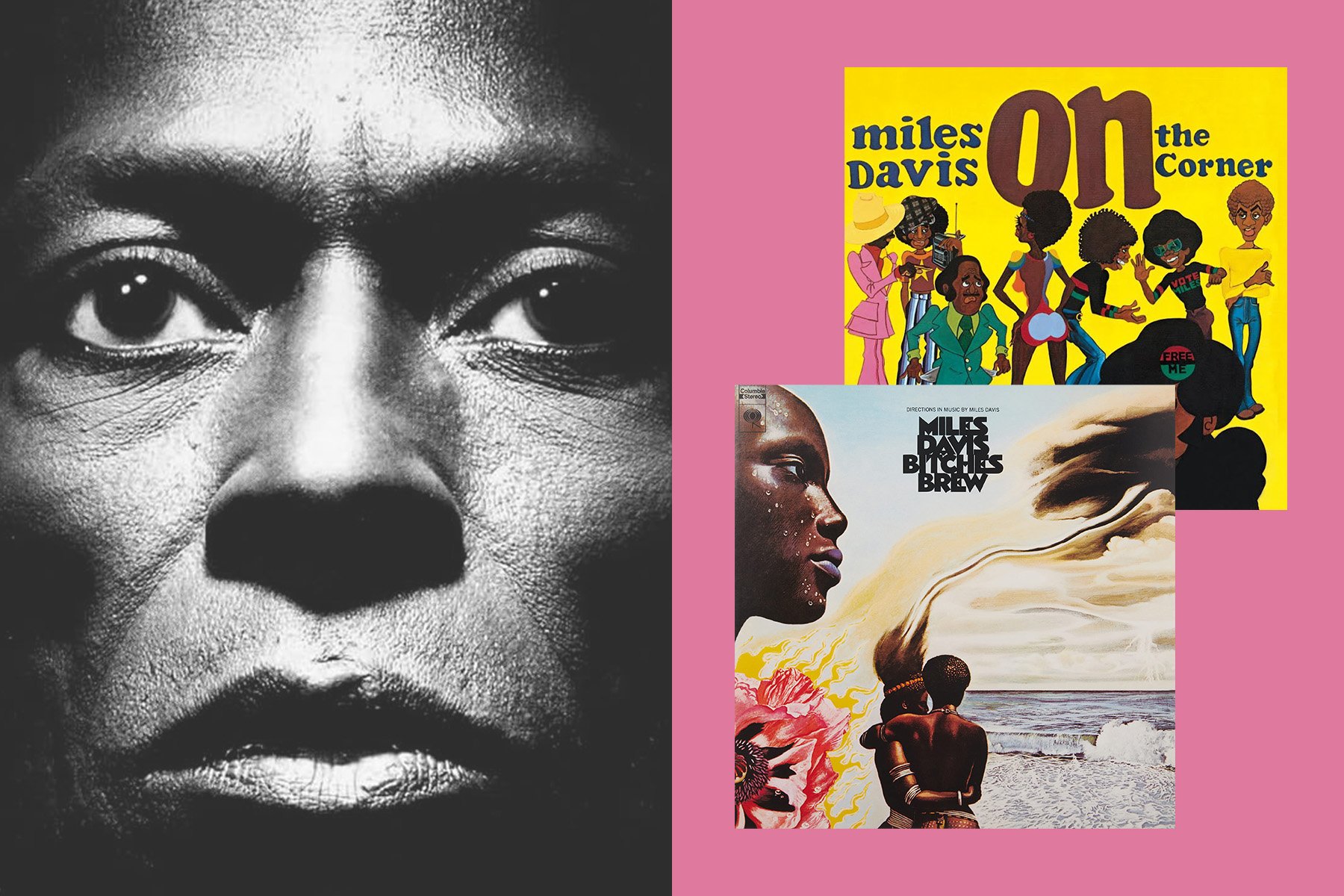

It's difficult to imagine a world without MIDI, the Musical Instrument Digital Interface that allows synthesizers to talk to computers and to each other. But that was the reality until 1983, when an unprecedented act of collaboration between competitors produced perhaps the most important music technology of the century: a common language available to all electronic instrument developers.

Why Standardize Instruments?

In the 1970s, expensive instruments from ARP, Moog, and others had brought synthesis to deep-pocketed specialists. Technology was advancing, and with the advent of instruments like 1978's Korg MS-20, which cost just $750, synthesis was moving toward a wider market.

At this point, there were already ways of communicating between instruments. The most widespread was control voltage (CV), but back then even CV-capable instruments from multiple manufacturers were not always directly compatible with each other: differing standards for CV and gate/pulse scaling and signal connector types kept most manufacturers' gear segregated from one another.

Sequential Circuits Prophet-5: the first commercial instrument with an integrated microprocessor.

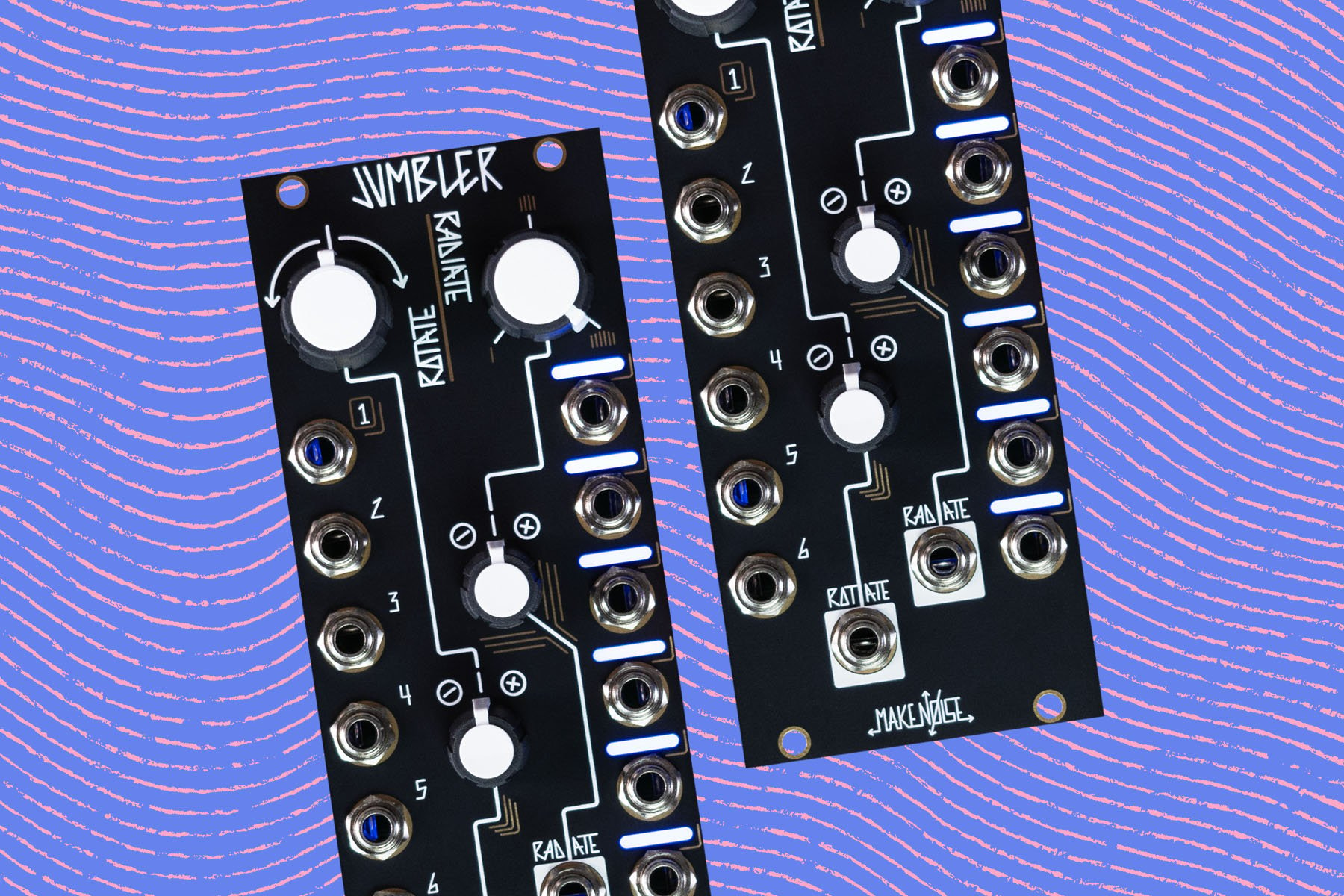

A desire for standardized digital control came about in tandem with the rise of polyphony. Polyphony in modular systems was difficult and tedious, as a CV and gate signal was required for each voice...and implementing this approach in non-modular instruments was difficult given the nature of discrete analog components and simple integrated circuits. Microprocessors changed the nature of instrument design: because they were inherently re-programmable general purpose devices, they could be adapted to a huge range of applications far more easily than more low-level electronic components.

The widespread availability of affordable microprocessors meant that manufacturers could turn away from typical controller implementations toward entirely new means of managing user interaction and voice allocation. By abandoning modular-style CV and gate schemes in favor of working with microprocessors, polyphonic instruments like the Sequential Circuits Prophet-5 became possible. But the lack of standards for how polyphonic data was managed in the early days meant that even a single manufacturer's devices were often not as interoperable as they might have been in the CV/gate days. And the increasing presence of computers in the musicmaking world made a standardized computer interface for musical instruments ever more desirable.

Of course, adventurous manufacturers were already developing ways of connecting computers and their synthesizers: but in these early experimental days, all approaches were entirely proprietary and often involved the use of relatively expensive connection schemes. And some manufacturers were also tackling the issue of sending digital data between their own instruments...again, with proprietary methods and relatively expensive implementations overall. Among these early digital protocols, for instance, was Roland’s proprietary Digital Control Bus (DCB) Interface—which did find its way into a few products, but had a limited lifespan given MIDI's ensuing introduction.

Because different designers developed their own solutions to these problems in relative isolation, some engineers began to worry about the lack of compatibility between different synthesizer makers. Public demand for compatibility with computers was also increasing, and there were fears that the gulf between manufacturers' methods might inhibit the growth of the synthesizer market as a whole. What the synthesizer business needed was a uniting force—something that could serve as a universal communication system.

Creating a Solution: MIDI

In California, Sequential Circuits President Dave Smith was thinking about a synthesizer interface that could communicate between instruments, regardless of manufacturer. Across the Pacific in Japan, Ikutaro Kakehashi, founder of Roland Corporation, was conceiving of a similar concept. In October 1981, Smith and Sequential engineer Chet Wood presented a paper at the Audio Engineering Society’s national convention. In it, they proposed a concept for a Universal Synthesizer Interface, a sort of MIDI prototype that used regular 1/4" cables.

But in order to make something that would be a universal standard, Smith and Kakehashi realized they needed buy-in from all the manufacturers. They began working together. Kakehashi rallied the Japanese manufacturers, while Smith talked to the Americans. At the National Association of Music Merchants (NAMM) show in February 1982, they organized an international meeting to present the idea to their peers, including representatives from ARP, Moog, Crumar, Voyetra, and Synclavier.

Many of the American companies initially dismissed the idea. The Japanese manufacturers, however, had already learned the importance of a standard. In the 1970s, Sony's Betamax and JVC's VHS had been embroiled in an expensive battle to become the standard video format.

"Sony lost the game," remembered Kakehashi. "Then everybody understood that one standard is so important. So very quickly, they agreed."

An alliance was formed. Smith from Sequential collaborated with Roland, Yamaha, Korg, and Kawai. Together, they went to work developing what would become MIDI. Engineers from Roland worked with engineers from Yamaha, in discussion with Smith back in the U.S., to bring to life a universal protocol for all electronic musical instruments.

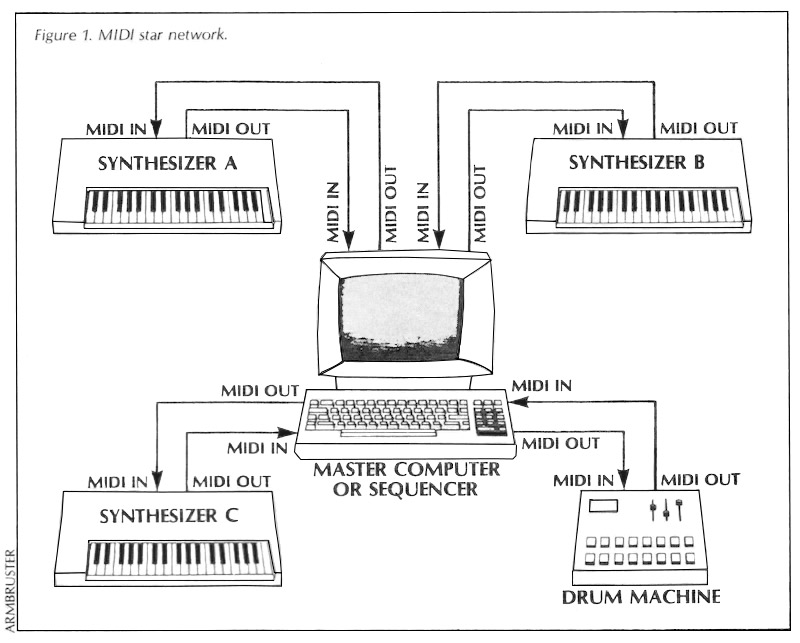

MIDI star network diagram, from July 1983 Keyboard Magazine article by Bob Moog

In a November 1982 cover interview with Keyboard magazine, Robert Moog announced publicly that Smith and Wood had been working on an invention: MIDI. By December 1982, five-pin MIDI ports made their first appearance on a commercial instrument: the Sequential Prophet-600. A month later, Roland's Jupiter-6 was released with the same capabilities. At January 1983's NAMM, the two instruments (from rival companies) were connected via a five-pin MIDI cable.

"Roland brought over their JP-6 and they plugged it into the Prophet-600," remembers Smith. "It was the first MIDI connection—and it worked! You banged on one keyboard and the other keyboard played."

MIDI works by transmitting a digital signal, or event message, which carries basic instructions. A typical MIDI message signals that a note is starting or stopping, along with its note number and velocity. It was a technology so simple and cheap that it was irresistible. With Yamaha's release of the MIDI-equipped DX7 in 1983, three distinct manufacturers had embraced MIDI. In its first iteration, MIDI could only send the most basic instructions, such as which notes to play. But crucially, Smith and his co-conspirators made MIDI an open, royalty-free standard, so it could be built upon. The MIDI specification quickly grew to include a variety of more complex operations. Several incremental updates have been introduced across the years, but the core functions of the MIDI 1.0 protocol have remained essentially unchanged, and as such, early 1980s MIDI devices are still compatible with modern systems.
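
To make the idea of an event message concrete, here is a minimal sketch of how a MIDI 1.0 note message is laid out as three bytes: a status byte (message type plus channel), a note number, and a velocity. The helper names `note_on` and `note_off` are made up for illustration and do not come from any particular library.

```python
def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Build a 3-byte MIDI 1.0 Note On message (channel is 0-15 on the wire)."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("note and velocity are 7-bit values (0-127)")
    # 0x90 marks Note On; the low four bits of the status byte carry the channel.
    return bytes([0x90 | channel, note, velocity])


def note_off(channel: int, note: int, velocity: int = 0) -> bytes:
    """Build a 3-byte MIDI 1.0 Note Off message (status 0x80)."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("note and velocity are 7-bit values (0-127)")
    return bytes([0x80 | channel, note, velocity])


# Middle C (note 60) on the first channel, struck fairly hard.
message = note_on(0, 60, 100)
print(message.hex(" "))  # 90 3c 64
```

Three bytes per note event is a large part of why the protocol was cheap enough to put in every instrument.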

"We let all the details get fixed in the marketplace as things were first introduced," said Smith. "Maybe we should have had a testing lab, but the synthesizer market was a tiny market back then."

Buchla's innovative Thunder, from July 1990 Keyboard Magazine Review

MIDI quickly became a standard consideration in instrument design and a huge influence on the music tech industry. Eventually, MIDI controllers emerged as a new category of instruments altogether, allowing forward-thinking designers to focus on creating new means of user interaction without simultaneously needing to develop a new synthesis engine. This also led to the abundance of rack-based synthesizers that followed in the 1980s and 1990s. Together, these developments made it easier than ever before for musicians to choose both a sound engine that inspired them and a way of playing that inspired them, without having to compromise in either realm. Controller types ranged from keyboards and drum pads to more novel devices, such as Buchla's Thunder and Lightning controllers, AKAI's EWI series, and more; rack synthesizers ranged from condensed versions of bulky polysynths to samplers and beyond. Meanwhile, Digital Audio Workstations (DAWs) evolved to integrate MIDI, allowing compatibility between home computers and all of these developments.

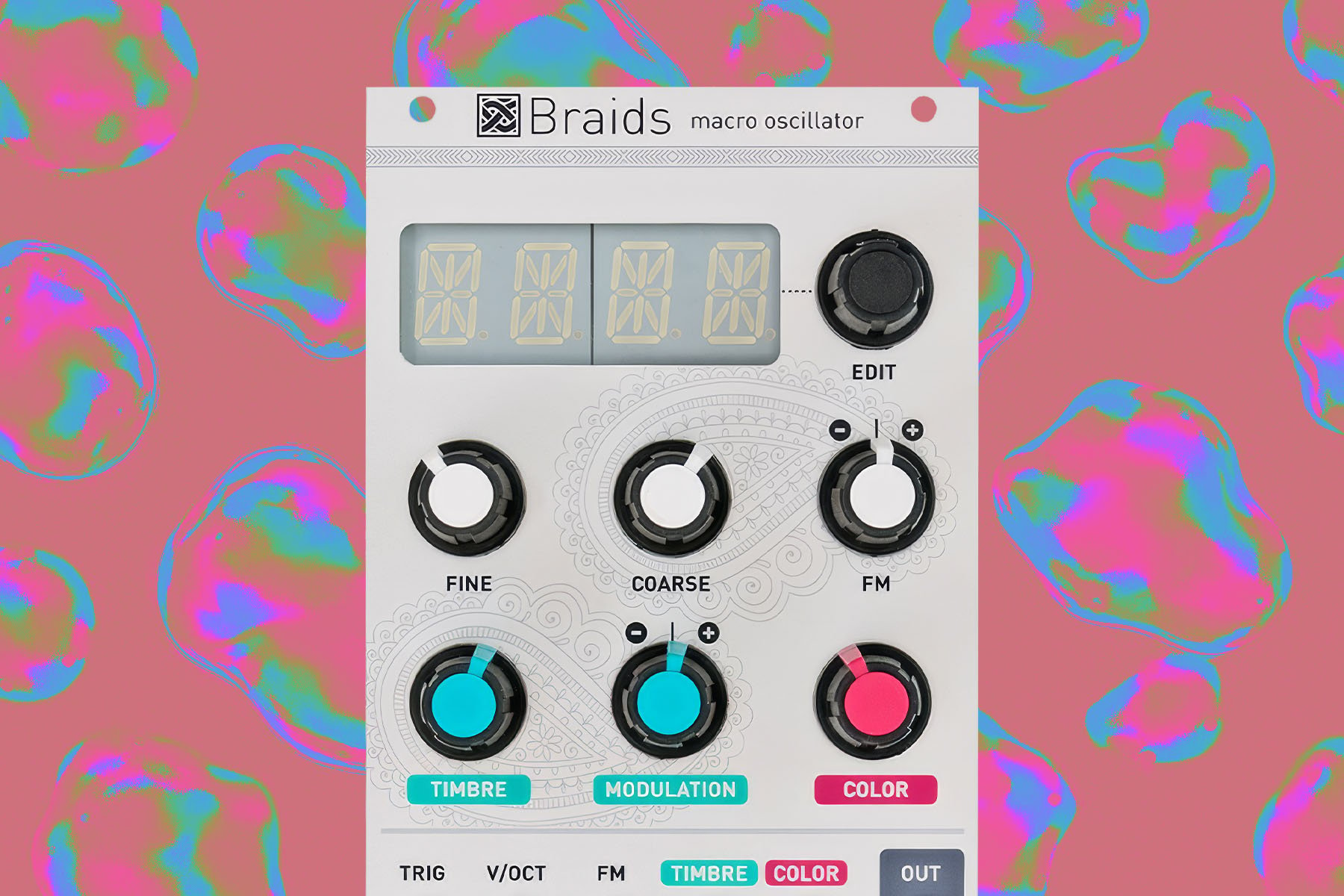

Challenging the Norm: ZIPI

Since then, there have been several attempts to build upon MIDI. Despite its obvious power and utility, as MIDI's standard features began to solidify, there was natural resistance to some of its inherent quirks. Some musicians were perturbed by the relatively low resolution of many data types (often allowing only seven bits of resolution, for a total of 128 potential values for associated parameters). Others were bothered by clumsy implementations of timbral control for polyphonic instruments, which could lead to less-than-satisfactory expressive control. Experimental electronic musicians were also put off by MIDI's seeming reliance on the concept of the "note" as a basic unit of music, and sought a less keyboard-based and event-based means of inter-device communication.

ZIPI logo, from Zeta/CNMAT/G-WIZ Proposal for a New Networking Interface for Electronic Musical Devices

One of the first substantial attempts to create such a protocol was 1994’s Zeta Instrument Processor Interface (ZIPI), proposed by Keith McMillen of Zeta Music Systems, Inc. and Gibson Western Innovation Zone Labs (G-WIZ) in collaboration with UC Berkeley's Center for New Music and Audio Technologies (CNMAT). It aimed to improve upon MIDI’s need to daisy chain instruments together by running on a star network with a hub in the center. ZIPI used new messaging systems based on David Wessel and Matt Wright's Music Parameter Description Language (MPDL) rather than MIDI events, and could deliver much more complex directions including modulation, envelopes, and instrument-specific messages—as opposed to MIDI’s generic program change, volume and pitch messages, and relatively low-resolution CC messages. Additionally, MPDL promised to provide the possibility of performing per-note timbre changes for polyphonic instruments—a feat difficult to achieve with MIDI since such important messages as pitch bend, for instance, equally affect all notes on a single MIDI channel. But though ZIPI seemed on paper to offer more capabilities than MIDI, it never reached a final state of development, and no ZIPI-capable devices were commercially produced.

ZIPI, though, was not a complete failure. In 1997, former MPDL and ZIPI developer Matt Wright, along with Adrian Freed, unveiled the Open Sound Control protocol, or OSC. It was much more flexible and easier to organize, providing an open-ended naming scheme and considerable freedom in how parameters of sound are arranged. OSC is still widely used today, valued for its remarkably high resolution, high degree of customizability, and its inherent ability to send data via network connections. Due to its open-ended nature (which often requires a certain amount of purpose-specific setup), OSC is not as immediate or widespread as MIDI; but it is still supported by many instruments and software applications such as Max/MSP, Max for Live, Pure Data, SuperCollider, and others. While not typically used in most commercial music production settings, it is widely used in academic and experimental "computer music" contexts.
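
As a rough illustration of OSC's open-ended naming scheme, the sketch below encodes a single OSC 1.0 message by hand: a slash-separated address, a type tag string, and a 32-bit float argument, each padded to a four-byte boundary. The address `/synth/1/cutoff` and the helper names are invented for this example; a real project would more likely use an existing OSC library.

```python
import struct


def osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    padding = (-len(data)) % 4
    return data + b"\x00" * padding


def osc_float_message(address: str, value: float) -> bytes:
    """Encode an OSC message carrying one big-endian float32 argument."""
    # ",f" is the type tag string announcing a single float argument.
    return osc_string(address) + osc_string(",f") + struct.pack(">f", value)


# Hypothetical address: the sender is free to name parameters however it likes.
packet = osc_float_message("/synth/1/cutoff", 0.5)
print(len(packet), packet.hex(" "))
```

Where MIDI identifies a parameter by a controller number from 0 to 127, an OSC address is simply whatever path the sender and receiver agree on, which is both the source of its flexibility and the reason it needs per-project setup.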

MIDI Polyphonic Expression

Eventually, though, instrument makers and developers found ways to address many of MIDI's purported shortcomings within the protocol itself, leading to the definition of a control standard now known as MPE, or MIDI Polyphonic Expression. MPE builds upon standard MIDI implementations, ultimately enabling use of multidimensional controllers that can manage multiple parameters of every note from even a single keypress. As noted above, critical control parameters in MIDI such as pitch bend messages and CCs used to control timbral parameters act upon an entire MIDI channel all at once: so, if playing a polyphonic instrument with a single MIDI channel, there is typically no way to separately affect the amount of pitch bend or timbral change for each note independently of one another.

Sensel's Morph, an affordable, MPE-capable multi-touch controller

MPE circumvents this problem by placing each played note on its own separate MIDI channel—opening up the potential for per-note independent expression for any musical parameter. Naturally, this begins to reach beyond the user interface of most controllers: because the position of sliders and knobs on a typical controller has nothing to do with the individual notes played, instrument designers have begun creating novel controllers which produce a broad range of control messages from the playing surface itself. Such devices include the Roger Linn Linnstrument, ROLI Seaboard, Keith McMillen K-Board Pro 4, and Sensel Morph (now discontinued)—all of which provide starkly minimalistic physical designs with rich expressive potential. By deriving tonal control from physical interactions such as pressure, attack and release velocity, and the finger's X and Y position on the touch surface, these controllers allow control of multiple aspects of a sound from a single touch.
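
The note-per-channel idea can be sketched in a few lines. The example below assumes an MPE lower zone, where channel 1 is reserved for zone-wide messages and channels 2 through 16 are handed out to individual notes. The `MpeAllocator` class is a hypothetical, deliberately naive allocator written for illustration; real controllers use more careful channel-reuse strategies.

```python
class MpeAllocator:
    """Naive note-per-channel allocator for an MPE lower zone (illustrative only)."""

    def __init__(self, member_channels=range(1, 16)):
        # On the wire, channels are 0-15: 0 is the zone's master channel,
        # 1-15 are the per-note member channels (shown to users as 2-16).
        self.free = list(member_channels)
        self.active = {}  # note number -> member channel

    def note_on(self, note: int, velocity: int) -> bytes:
        if not self.free:
            raise RuntimeError("no free member channel")
        channel = self.free.pop(0)
        self.active[note] = channel
        return bytes([0x90 | channel, note, velocity])

    def pitch_bend(self, note: int, value: int) -> bytes:
        """Bend one sounding note; value is 14-bit (0-16383, 8192 = centered)."""
        channel = self.active[note]
        return bytes([0xE0 | channel, value & 0x7F, (value >> 7) & 0x7F])

    def note_off(self, note: int) -> bytes:
        channel = self.active.pop(note)
        self.free.append(channel)
        return bytes([0x80 | channel, note, 0])


alloc = MpeAllocator()
first = alloc.note_on(60, 100)      # lands on member channel 2 (0x91)
second = alloc.note_on(64, 100)     # lands on member channel 3 (0x92)
bend = alloc.pitch_bend(64, 9000)   # bends only the second note
print(first.hex(" "), "|", second.hex(" "), "|", bend.hex(" "))
```

Because the pitch bend message is addressed to the second note's channel alone, the first note stays at its original pitch, which is exactly what a single shared channel cannot do.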

Black Corporation's Kijimi controlled by Keith McMillen's K-Board Pro 4

Of course, this clever note-per-channel strategy for control requires instruments that can recognize it, and development on that front has been somewhat slower than in the realm of controller design. For the first couple of years of its use, MPE could only be realized with software such as Bitwig or Apple's Logic production environment. Luckily, with the standardization of MPE in early 2018, instrument developers have been empowered to explore this new protocol's potential. Now, instruments such as the Black Corporation Deckard's Dream and Kijimi, Modor NF-1, and even modular devices such as the Endorphin.es Shuttle Control and Expert Sleepers FH-2 allow for MPE experimentation in the hardware realm. Developments on that front seem only to be accelerating, and it is exciting to think of what the future may hold.

Looking Forward: MIDI 2.0

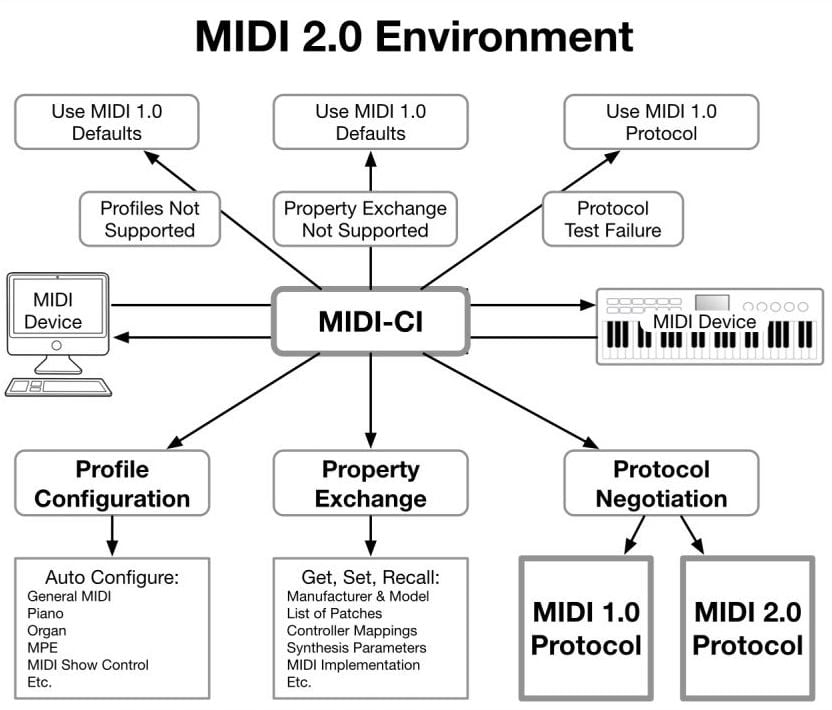

Of course, the latest development in MIDI is MIDI 2.0, originally referred to as MIDI HD since its conception back in 2005. Officially announced in January 2019, MIDI 2.0 updates MIDI with new DAW integrations, increased expressive control, tighter timing, and more. Happily, MIDI 1.0 and 2.0 will be effectively interoperable, and MIDI 1.0 will not be thrown to the curb as a result of 2.0's development.

MIDI 2.0 environment, from MIDI Association press release

MIDI 2.0 promises a ton of improvements and optimizations over the existing protocol. Some of the most obvious and exciting changes involve expanded capacity for per-note expression and dramatically increased control resolution. Note velocity, for instance, will now be 16-bit, while pitch bend, modulation, and all CCs offer an astonishing 32 bits of resolution. For a typical CC, that means a sudden jump from 128 discrete steps to over four billion (MIDI 1.0 pitch bend was already 14-bit, or 16,384 steps)...a change with huge implications for user interaction and instrument design as a whole.
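
The step counts behind these bit depths are just powers of two, and a quick calculation shows the scale of the change. The labels below are descriptive only, and the `steps` helper is made up for this example.

```python
def steps(bits: int) -> int:
    """Number of discrete values representable with the given bit depth."""
    return 2 ** bits


resolutions = [
    ("MIDI 1.0 CC or velocity", 7),
    ("MIDI 1.0 pitch bend", 14),
    ("MIDI 2.0 velocity", 16),
    ("MIDI 2.0 CC or pitch bend", 32),
]

for label, bits in resolutions:
    print(f"{label}: {bits} bits = {steps(bits):,} steps")
# MIDI 1.0 CC or velocity: 7 bits = 128 steps
# MIDI 1.0 pitch bend: 14 bits = 16,384 steps
# MIDI 2.0 velocity: 16 bits = 65,536 steps
# MIDI 2.0 CC or pitch bend: 32 bits = 4,294,967,296 steps
```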

MIDI 2.0 also provides support for the re-mapping of pitch control—meaning that in theory, constructing any type of scale on any type of playing interface is possible. Combined with inherent support for per-note pitch bend and modulation, this means that we might take a huge step toward easy exploration of non-standard tuning systems...a feat currently possible, but somewhat difficult with many MIDI-related setups.

Aside from these obvious advantages, MIDI 2.0 additionally opens up a means of bidirectional communication between devices: via a concept called Property Exchange, devices can let one another know any number of details about their inner workings, ranging from preset names/numbers to the nature of their own internal parameters. Devices can tell one another what they are and how they work, so instruments and controllers with common mapping strategies could theoretically map to one another automatically. Additionally, this could allow a computer to construct real-time Graphical User Interfaces automatically, without the need for specialized, instrument-specific editors.

Of course, there is much work to be done before MIDI 2.0 becomes a widespread reality—but given what we already know, it seems to promise increased ease of use, higher resolution, and considerably greater general flexibility: all quite welcome changes.

Originally emerging as a solution to a practical problem, MIDI has gradually evolved into a considerably more flexible and expressive tool than initially seemed possible. MIDI represents an all-too-uncommon act of benevolence in the entire music industry, where competitors worked together to provide a means of making things better for musicians and for one another. MIDI has since proven to be remarkably adaptable: MPE, MIDI via Bluetooth, the emergence of 3.5mm MIDI connections, and countless other developments are a testament to its ability to continually adjust to the changing needs of musicians. And though the last decades have seen many twists and turns, the future seems rife with potential...so here’s to many more years of MIDI magnificence.