When it comes to working in the box, few things get us more excited than working with Cycling '74's Max/MSP. Whether it's exploring niche synthesis methods, creating Max for Live effects and instruments, or building interactive environments (remember our Type to Rhythm Machine?), we're big fans of the real-time patching power of Max. After all, most of us here are musicians first and foremost—though we may like to dabble in code from time to time, Max has the distinct advantage of being able to get working results fast that fulfill our musical, visual, or general creative needs.

One specific subset of Max programming revolves around the gen family of objects: a similar patching-style environment with some distinct differences and lower-level advantages compared to typical Max patching. These days there are gen objects for working with video, OpenGL textures, and standard Max event data—but it all started with gen~ for working with audio. There's a much more limited set of objects, referred to as operators in this context, within gen~, but in some ways, the possibilities are greater. And of course, gen~ patches can be used among standard MSP audio objects in Max patches and wrapped up with poly~ or MC for easy polyphonic and multichannel applications.

Some folks have felt that getting into gen~ can be a bit opaque, but the publication of the book Generating Sound and Organizing Time aims to change that. Written by Graham Wakefield, the creator of gen~, and Cycling '74's Gregory Taylor, GO Book 1 is jam-packed with examples and inspiration for making music and sound with gen~. Even if you're fairly new to Max as a whole, this book offers some incredible perspectives for thinking about audio differently and showcases the power and malleability of gen~ patching.

We've recently added GO to our catalog of books on electronic music and synthesis, so we thought it might be a great opportunity to chat with Graham and glean some insights from the person who conceived gen~ in the first place! In addition to talking about the book, we cover some general philosophies about working in gen~, and shed some light on some of the ways that you might be able to take your patches with you wherever you go!

To coincide with this interview, we went to some extra effort to take some of the things we learned from GO and embed them on this page as interactive examples of the power of gen~. Thanks to Cycling '74's RNBO, you can take select Max patches, including things made in gen~, and export them as compiled code to a variety of destinations, including JavaScript and the Web Audio API used by most modern web browsers on computers, smartphones, and beyond. You'll find a few of these sprinkled throughout the interview, hopefully enticing you to grab the book and see what gen~ is all about for yourself!

Interview with Graham Wakefield

Jacob Johnson: Hi Graham! Thanks so much for spending some of your time with us. Let's start by talking a bit about your background: When did you first begin exploring music, art, and sound? What was your first introduction to electronic music, and then specifically computer music?

Graham Wakefield: I started pretty early with an outside-in perspective on music & technology. By chance I saw a Casio SK-1 when it came out (1985) in a local electronics shop when I was 10 and I thought the idea was brilliant: that you could make music with any sound you can find, unlike all the usual keyboards with a predetermined set of very similar sounds. I built up collections of sample sources on tape and played that SK-1 to death. Though I failed to really fix it, in the attempt I did accidentally find some of the amazing circuit bent stuff it can do. So for the next few years I was picking up whatever bits of equipment I could from local charity shops (thrift stores) and so on, and hacking at them with high-school electronics naivety. I grew up in a small town in the North of England in the 1980s; there were no synth shops – it was old amps, tape decks, the occasional EQ.

[Above: various Casio SK-1 keyboards; images via Perfect Circuit's archives]

My first computer was a second-hand Amiga 500, with the OctaMED tracker and a wave editor from a hobby magazine disk. From that point I was recording stuff every night, mixing down onto cassette overdubs with all the other stuff. I had to figure everything out myself as there was nobody to ask, no internet, hardly any books; but I also think that the situation made it a lot easier to be open-minded and exploratory, and that has really shaped everything I have done since.

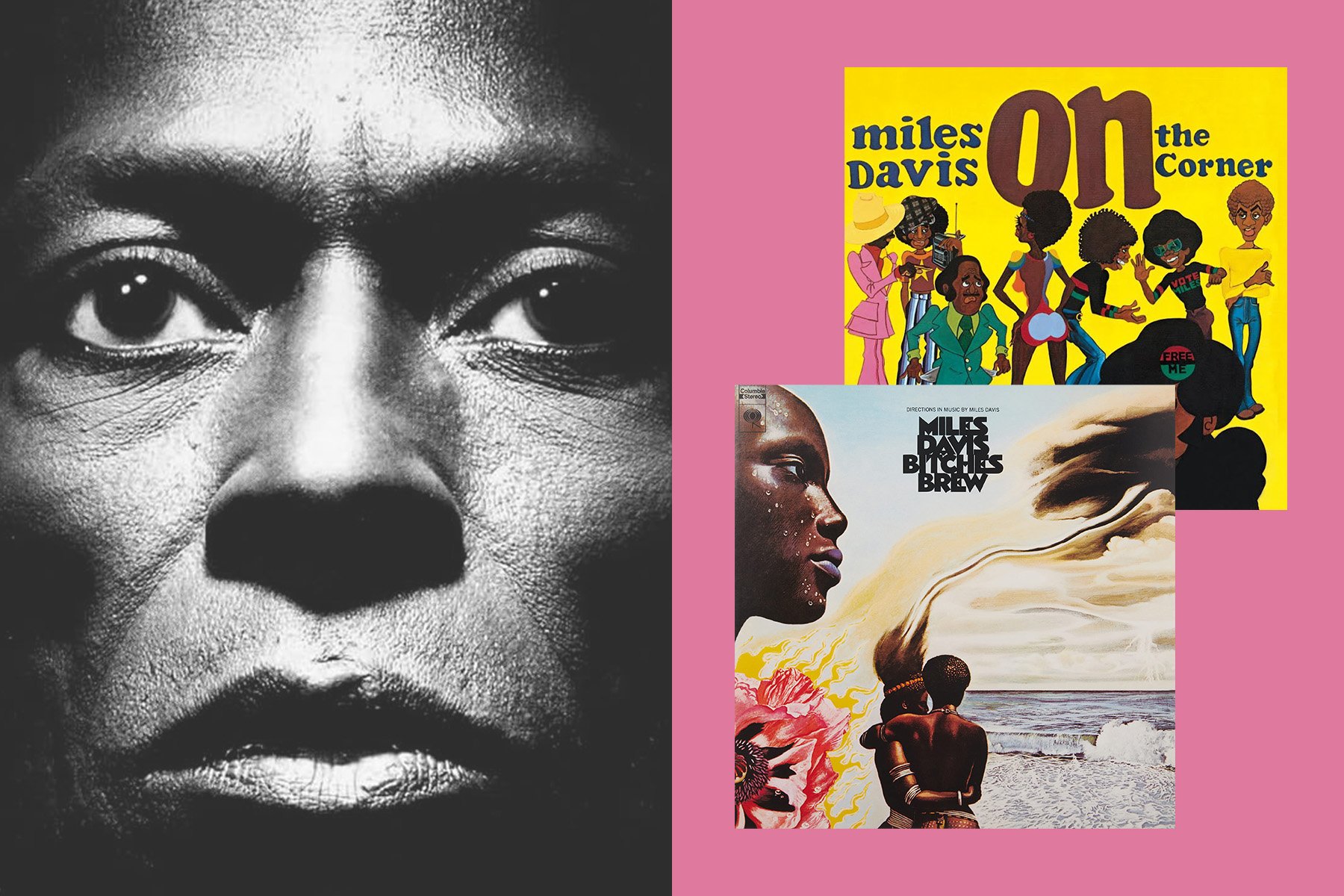

A few years later I was gigging in clubs with a couple of Amigas, a mixer and a delay pedal. It was the early 90’s, drum’n’bass was breaking out (a highlight was playing the Que club on the same setlist as Grooverider). I had a PC but still preferred the Amiga because it was so performable live – there was a lot that it could do in real-time that PCs didn’t catch up with for many years. The PC audio software at that time was also mostly trying to emulate a pro-level studio, which to me seemed a lot less creatively open, useless for performance, and kind of a missed opportunity for what a computer might do for sound.

JJ: What led you to discover Max? Compared to other computer music platforms and programming languages, what did you find the most captivating about Max?

GW: A few years later I was devouring books on the deep history of electronic and computer music, and the studios and university labs in which these things happened. Soon I was doing a Masters in Music at Goldsmiths College, University of London (thanks to Katherine Norman for taking my application seriously) and in no time I was deep into Max/MSP. Almost everything that had seemed to me to be frustrating or limiting about most software I could bypass by building my own thing in Max. The documentation – help files, example patches – is an amazing education in itself. And anything I was curious about I could dig into more deeply, and build into complex generative and interactive systems.

JJ: In your brief bio at the start of Generating Sound and Organizing Time, it's stated that you created gen~ while pursuing doctorate studies at the University of California Santa Barbara. What prompted you to create this new subset of tools within Max?

GW: Yes – I got really interested in microsound – Curtis Roads’ book had just come out, there was a real focus on it in the mid 2000’s – and I went to study with him at UCSB’s Media Arts & Technology Masters and PhD programs. How this led to gen~ could be a little technical but I’ll try to keep it as short as I can. At the core it was a question of efficiency versus open-endedness.

Audio signal processing is inherently tough on the CPU – we have to run an accurate algorithm tens of thousands of times per second in sequence, and the CPU doesn’t like surprises. That’s why the parts of software that process audio are usually written in a language like C/C++, specialized to a specific task, and compiled to a machine code library (such as a VST plugin or Max/MSP external) to make them as efficient as possible. So, I followed advice and learned to code C++, starting by building some externals for Max.

But once code is compiled you can’t modify the internal structure of what it does. That’s why there are now tens of thousands of Max externals, each one doing a specific thing. I wasn’t interested in building something with a fixed structure. Whether improvising in a performance, or just working in a studio, we are often responding to unexpected moments that take us off in new directions. I wanted a way to explore a seemingly endless space of algorithmic possibilities for arranging and specifying tiny fragments of sound. But C++ was always asking me to decide things in advance – to optimize code performance by restricting what can happen.

So instead I embedded a flexible programming language (Lua) into the audio process of a Max/MSP external. The audio of every microsonic grain could be generated by a completely different signal processing algorithm, and placed in time with (sub-)sample accuracy alongside others. There might be loops scheduling trains of grains, and other loops scheduling the schedulers, and so on. It was a runtime system so you could insert and run more code on the fly, for live coding. Or you could write a genetic algorithm that would define, mutate, and run new granular signal processes by itself. It was incredibly open-ended and pretty expressive. However I kept hitting the CPU limits of running audio signal processing in a scripting language.

The only way I could see to get the best of both worlds – efficiency with open-ended structures – was through dynamic compilation. That means creating and integrating new bits of efficient machine code in the moment that they are needed. Actually dynamic compilation has been researched in computer science for half a century, but at the time it hadn’t been widely used for audio software, so that’s what I did for my PhD. Pretty quickly it wasn’t just about microsound anymore; I found that it had huge implications for what you can do with most audio signal processes. I’d also recently been hired by Cycling ‘74 as a part-time developer for Max/MSP, and with the support of David Zicarelli and Joshua Clayton, after a few years this research project became gen~.

Patch 1 - Polyphonic Complex Oscillator

[Playback issues? If using a mobile device make sure silent mode is off. If all else fails, try refreshing and waiting a moment or try another web browser]

JJ: Can you explain some of the new patching opportunities that gen~ brings to working with audio in Max? How is it similar to or different from working with traditional MSP audio objects?

GW: The basic picture is this: you can add a gen~ object inside any Max or RNBO patcher, and it works more or less like any other audio object, except that you can double-click to open it up and completely rewrite the structure of what it does. You can do this while it is running: every time you edit the gen~ patcher, it converts the contents to efficient machine code, and you hear the results immediately.

The key thing to know is that, unlike a regular Max or RNBO patcher (or indeed most audio software), the entire content of a gen~ patcher efficiently runs one sample at a time. That means you can have a feedback loop as short as a single sample. This is important because single sample audio feedback loops are at the heart of the majority of interesting audio signal processes – oscillators, most filters, and many other audio-rate processes. Let’s say you’ve played with analog synths before and love the sound of feedback FM, where you route the output sound of the oscillator back into controlling its frequency; and especially what happens when you put a filter in that feedback path. Or maybe you want to try putting a shift register there. Without an environment like gen~ that allows single-sample loops, you’d have to go and learn C++ to explore these kinds of things.
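To make the single-sample feedback idea concrete outside of Max, here is a minimal Python sketch (not gen~ code, and not a patch from the book). It assumes a sine oscillator whose own output, smoothed by a one-pole lowpass, is routed back into its frequency. The names `feedback_fm`, `feedback_hz`, and `smoothing` are hypothetical and chosen only for illustration.

```python
import math

def feedback_fm(freq_hz, feedback_hz, smoothing, num_samples, sample_rate=48000.0):
    """Sine oscillator whose lowpass-filtered output modulates its own frequency.

    Everything runs one sample at a time, so the feedback loop is exactly
    one sample long. All parameter names are illustrative assumptions.
    """
    phase = 0.0      # oscillator phase in cycles, kept in the range 0..1
    filtered = 0.0   # state of the one-pole lowpass sitting in the feedback path
    out = []
    for _ in range(num_samples):
        y = math.sin(2.0 * math.pi * phase)
        # Filter in the feedback path: move part of the way toward the new output.
        filtered += smoothing * (y - filtered)
        # The filtered output changes the frequency used for the very next sample.
        phase = (phase + (freq_hz + feedback_hz * filtered) / sample_rate) % 1.0
        out.append(y)
    return out
```

Setting `feedback_hz` to zero gives a plain sine wave; raising it lets the oscillator bend its own pitch from one sample to the next, which is the kind of loop that block-based processing cannot express directly.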

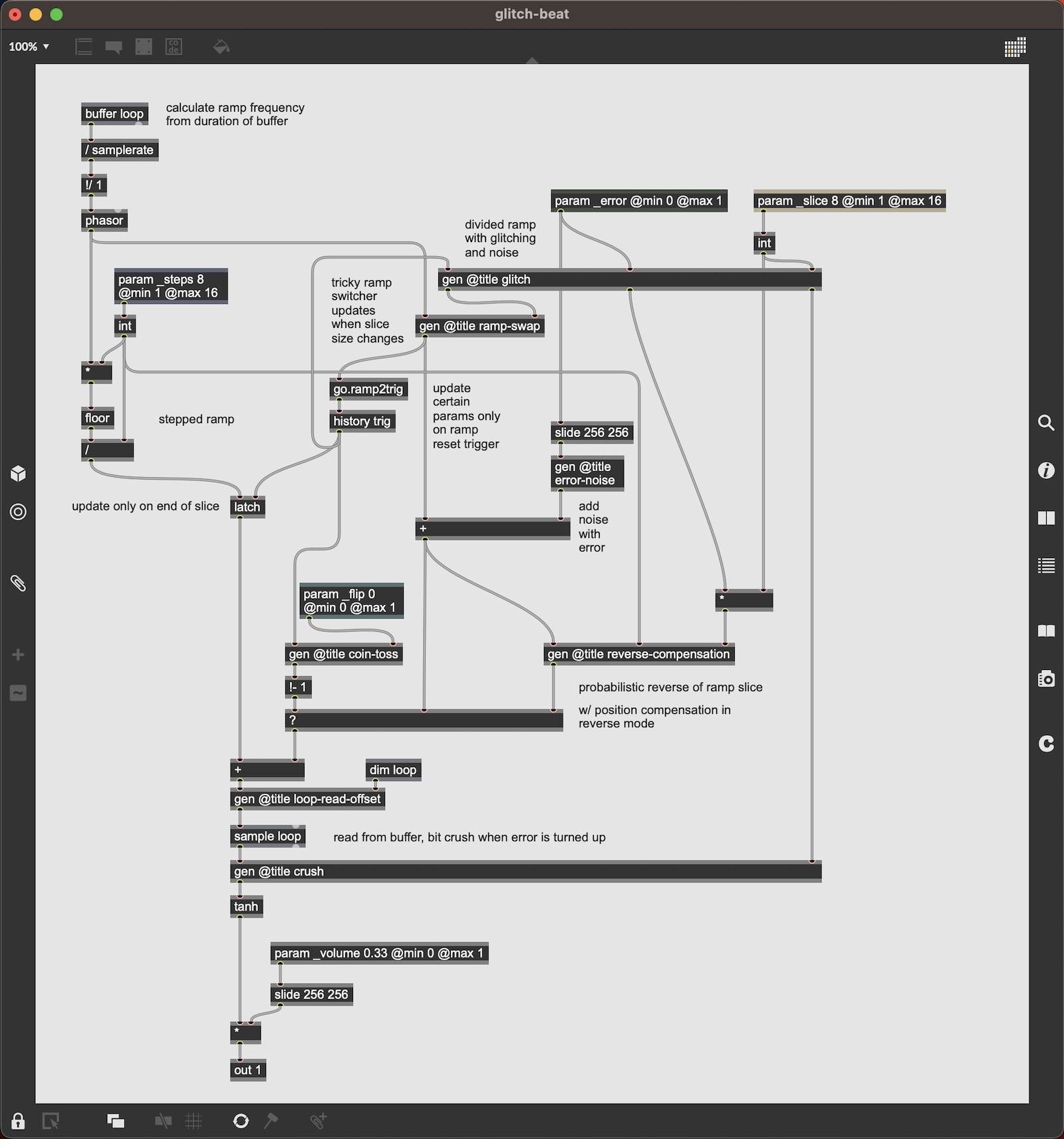

[Above: a screenshot of the gen~ patch used inside of the Beat Slicer example]

The reason why most other software doesn’t run one sample at a time is a little technical, but boils down to the same efficiency/flexibility tradeoff. Take a typical effects chain in a DAW: your CPU is jumping in and out of the fast pre-compiled bits of plugin code according to their order in the chain. This jumping in and out is flexible – it lets you rearrange the effects in the chain – but it would also be very expensive for the CPU if it was done at sample rates of tens of thousands of times a second. Instead, typically audio software passes audio between plugins in chunks of time, sometimes called “block size” or “vector size”, which is usually in tens, hundreds or even thousands of samples. The smaller you make that setting, the higher your CPU load goes. That setting also effectively limits how short an audio feedback loop can be. If it went right down to 1 sample your CPU would go crazy. But with dynamic compilation there is no need to be jumping in-and-out; the entire chain can become a single piece of machine code, and there’s no need for chunks of audio at all.
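A small Python sketch can illustrate how the block size sets a floor on feedback loop length. It is a simplified model, not how any particular DAW or Max itself is implemented: it assumes the feedback connection can only read the output of the previous block. The function names are hypothetical.

```python
def feedback_per_sample(x, gain, delay=1):
    """Feedback loop computed sample by sample: y[n] = x[n] + gain * y[n - delay]."""
    y = []
    for n, xn in enumerate(x):
        fb = y[n - delay] if n >= delay else 0.0
        y.append(xn + gain * fb)
    return y

def feedback_per_block(x, gain, block_size):
    """Feedback routed between blocks: each block only sees the previous block's output."""
    y = []
    prev_block = [0.0] * block_size
    for start in range(0, len(x), block_size):
        block_in = x[start:start + block_size]
        block_out = [xn + gain * fb for xn, fb in zip(block_in, prev_block)]
        y.extend(block_out)
        # Pad a short final block so the next iteration still has block_size values.
        prev_block = block_out + [0.0] * (block_size - len(block_out))
    return y

impulse = [1.0] + [0.0] * 7
print(feedback_per_sample(impulse, 0.5))             # echoes arrive every sample
print(feedback_per_block(impulse, 0.5, block_size=4))  # echoes arrive every 4 samples
```

In this model the block-based loop behaves like a per-sample loop with a delay equal to the block size, so the shortest possible feedback path is one whole block.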

We think that patching at the sample-by-sample level also makes a lot of things easier to think about. For example, it simplifies the set of ‘built in’ objects. Whereas Max has thousands of audio objects, each one using a custom piece of C code to do a specific thing, gen~ needs only about 100 built-in objects, and you can pretty much make anything you need from them, building up your own library of reusable patches and subcircuits.

Working at the sample-level also means you can experiment more freely: you can decide you want to crack open a filter and put something unusual like a bitcrusher inside its resonance feedback loop. Just like Max, the audio just keeps running while you’re patching, so you can try out ideas like this really freely and hear what they do. If a wild idea didn’t work, it didn’t cost you days of development. But if it did work, you can use it immediately.

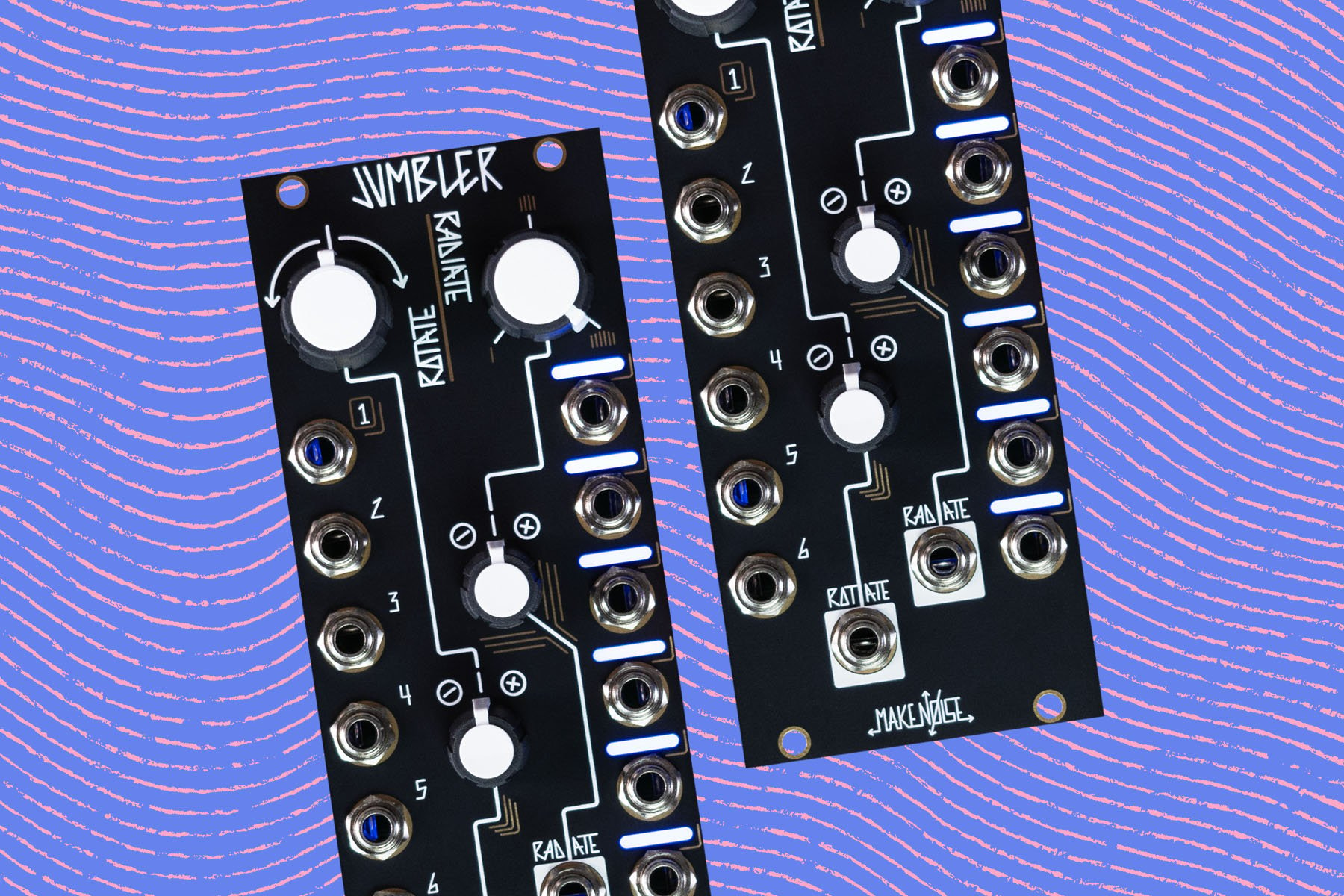

You can also share it with others – lots of people have shared lots of gen~ patches on the Cycling ‘74 forums and elsewhere. As far as I know, every single patch written in gen~ since its launch over a decade ago will still work today. You can embed it in a Max for Live device. And since you can export the C++ (whether from gen~ in Max or gen~ in RNBO), you can also turn it into a VST, put it on a Raspberry Pi, make a Eurorack module, etc.

JJ: You co-wrote Generating Sound and Organizing Time with Cycling '74's Gregory Taylor, who also wrote the wonderful Step By Step book. What was it like collaborating with Gregory and the rest of the Cycling '74 team on this book?

GW: I spent a few years as a Cycling ‘74 employee so I know most of the folks there very well, and they really are lovely people. It hardly ever felt like a company, just a group of really creative people dedicated to the same kinds of things. Gregory is an absolute delight to work with - no matter what the topic he always has some unexpected perspective or obscure yet fascinating detail to bring, if not a completely unexpected personal anecdote! It turns out we share a deep love of many of the same kinds of literature, obscure music, fascinations with the evolution of languages, and so on. He’s a brilliant teacher and a good listener, and incredibly patient with me!

JJ: There are so many topics discussed across GO's 10 chapters. Even though I've read through the book from start to finish, I still go back to the earlier chapters and uncover more inspiration as I work through examples. As broad as it is, was it difficult to sketch out the iterative "gen~ curriculum" that is built over the course of the GO book?

GW: Glad to hear it, we really hoped the book would work in this way – and glad to hear that you are working through the patching!

I’m strongly influenced by the Seymour Papert model of computing education, where we teach ourselves about a subject through a conversational process of making things within an interactive “microworld” (so we shape the system, rather than the system shaping us). That way there’s no cold distinction between theory and practice – they are always informing each other.

Gen~ is a microworld for exploring audio signal processing, and the GO book is supposed to be a helpful companion. So we tried to give it a structure that pulls in a lot of interesting ideas and methods with a progressive flow of complexity, but it is loosely arranged around bottom-up kinds of signals or transformations (such as ramped signals, stepped signals, signal shapers, unpredictable processes, audio-rate modulations, data navigations, etc.) rather than organizing the book by specific theories or application areas.

What the book is really trying to do is show how interconnected they all are, and how you might go about the problems and opportunities that come up as you make your own. Often a topic starts from a question implied by the previous topic – maybe a problem to be solved, or a possibility to explore – and how you might go about it. As much as possible we cross-reference from one chapter to another to make it clearer how even the most advanced parts are built out of a few fairly simple circuit-concepts or technique-patterns – “things to think with” – that come up again and again in the microworld of audio signal processing.

JJ: While you can write text code with GenExpr and Codebox, one thing that I appreciate about the book is the emphasis on building out gen~ patches with objects rather than text. I'm someone who got interested in computer music and DSP through Max first before exploring code in more traditional programming languages... which is a perspective I think a lot of other readers might share. Besides the occasional For Loop and other scenarios where Codebox is required, what's your philosophy on patching with objects as opposed to writing out code? Is it purely visual, out of continuity with the rest of Max, or something else?

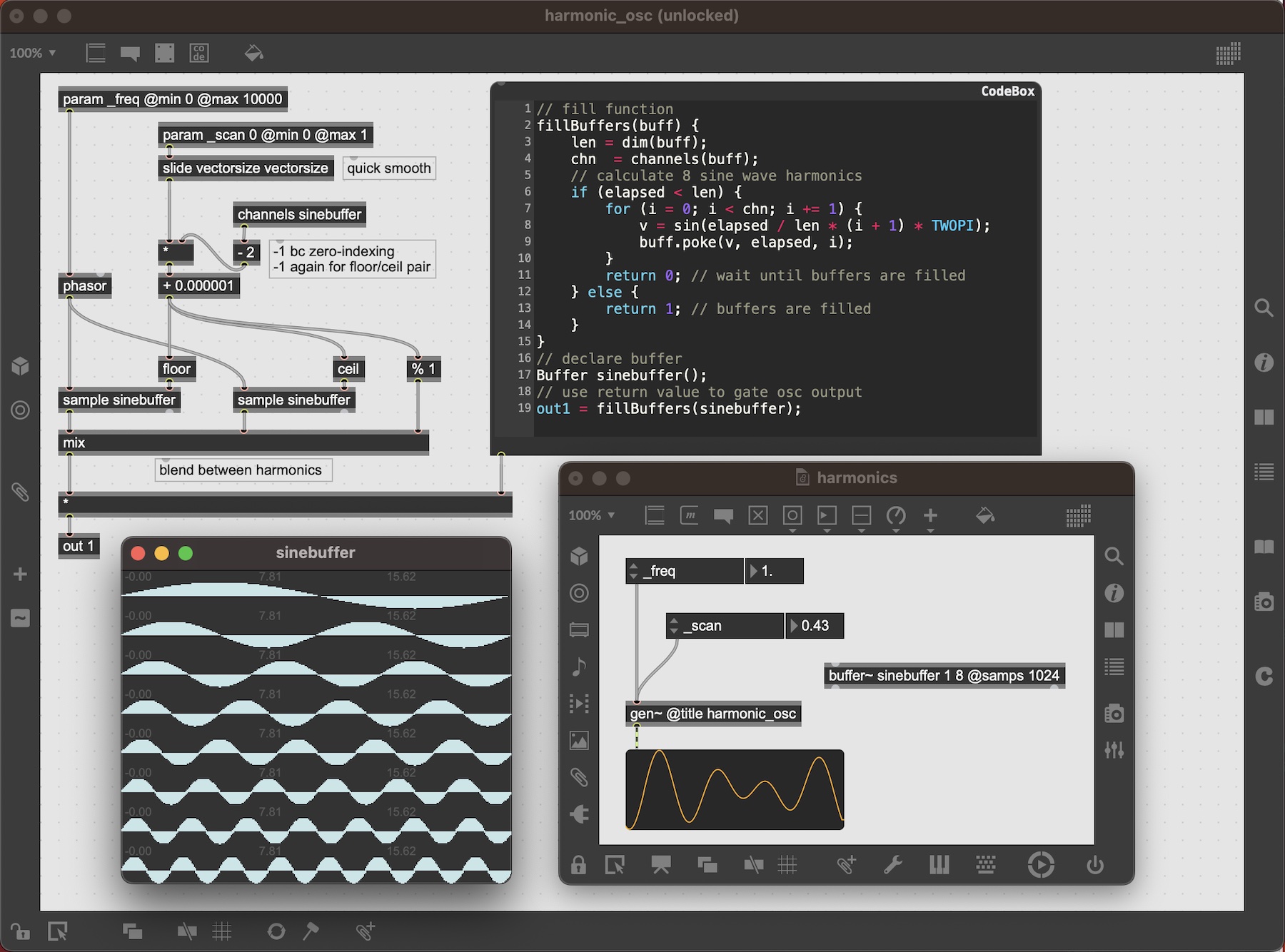

GW: Yes! We have seen this misconception in the community that somehow writing code in text is more proper or more efficient. Neither of those is true. We only use textual code in the book for the handful of situations where a problem can’t be solved by visual patching. Usually that’s because it needs some kind of iterative `for loop` or an infrequent conditional `if/else` section.

[Above: using Codebox to elegantly fill an eight-channel buffer]

If you’re already a coder you might find that working with codebox feels more comfortable, but even then there are some reasons to prefer patching. First of all, using code in gen~ does not make your patch more efficient. It might actually lead you toward habits that can be worse for efficiency – such as the temptation to put a lot of code inside if() blocks rather than finding an alternative “branchless” solution that will actually be better for the CPU. With visual patching you can see the structure of a solution far more clearly – such as revealing feedback loops that would be implicit in code. There are far fewer ways to make a syntax error or unnoticed typo, and there are better opportunities to document what your patch is doing visually – you can lay out the objects and cables in a way that makes the signal path clear, annotating them spatially with comments, and structure them with sub-patching that has no impact on performance. That’s really important when you dig up a patch written a couple of years ago and can’t remember how it worked!
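For readers unfamiliar with the term, a "branchless" solution replaces an if/else with arithmetic on the result of a comparison. The Python sketch below only shows the shape of the idea; the function names are hypothetical, and any efficiency benefit applies to compiled per-sample code rather than to Python itself.

```python
def rectify_branching(x):
    """Half-wave rectifier written with an explicit branch."""
    if x > 0.0:
        return x
    return 0.0

def rectify_branchless(x):
    """Same result with no branch: the comparison becomes a 0.0 or 1.0 multiplier."""
    is_positive = float(x > 0.0)
    return x * is_positive

def select_branchless(condition, a, b):
    """Choose a when condition is true and b otherwise, by mixing instead of branching."""
    mask = float(bool(condition))
    return mask * a + (1.0 - mask) * b
```

This mirrors how a comparison followed by a multiply or a mix would be laid out as objects in a patch: every sample follows the same path, and only the numbers change.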

JJ: The end of Book 1 foreshadows some of the topics that will be covered in the second book. Care to offer a sneak peek at what readers can expect in the next one? And what are you most excited to talk about in Book 2?

GW: Oh yes there’s quite a bit! In book 2 we tackle some of the more complex algorithms that are often asked about, such as anti-aliased oscillators, up/downsampling, reverberation and so on. Just like with book 1 we’re trying to show how much these reveal the same underlying ideas and circuit patterns, so that even though these topics might have an intimidating reputation of complexity, they can become much simpler when you look at them in a different way. And of course we’re also digging deep into some of the more unusual edges of each of them. We also continue into quite a few of the less mainstream aspects of time domain signal processing that we think deserve more attention, including working with complex polar signals, time-domain analysis, and some generative and biologically-inspired algorithms that we are especially excited about!

Patch 2 - Glitched Beat Slicer

JJ: A nice feature beyond just patching with gen~ is the ability to export patches as compiled code. Within gen~ itself you can generate and export C++ code, but now with the release of Cycling '74's RNBO, it's easier than ever to pipe Max and gen~ patches into VST plugins, Web Audio applications, and even portable devices based on the Raspberry Pi. Was this level of portability always a goal with gen~? Isn't it wild to be able to so easily use your patches outside of Max?

GW: There’s a little backstory there. The first version of gen~ I wrote generated virtual bytecode for a compiler called LLVM/Clang, because I assumed this would be the fastest way. But bytecode is not at all human-friendly to read. To help debug a problem I had it generate C code instead, so that I could actually read the code and figure out what was going wrong. The bug got fixed, and I was surprised to find that LLVM/Clang could compile the C code practically as fast as bytecode. So from that point on gen~ generated C/C++ internally. So although code export wasn’t the goal at the beginning of developing gen~, once we were generating C++, exporting it naturally followed.

With RNBO, code export has become much richer, both in terms of what it can support and in terms of the range of export targets. RNBO processes audio in blocks, just like Max/MSP, but we were able to incorporate gen~ patches inside of RNBO patches – so anything you make with gen~ in Max now also works in RNBO!

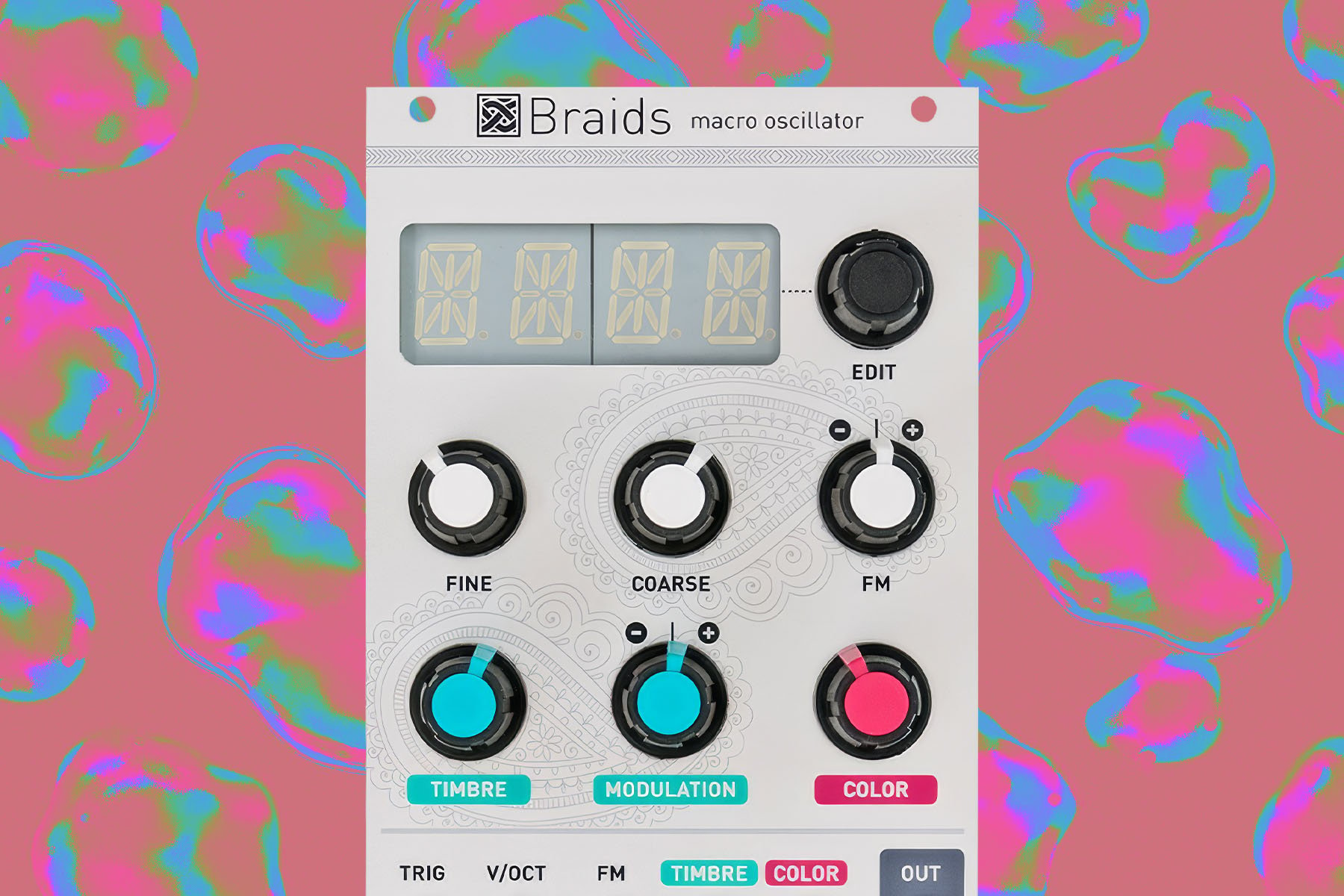

JJ: We're particularly enamored with Electrosmith's Daisy DSP platform, for which you've authored a set of tools called Oopsy that allows you to easily run your gen~ patches on pedals, Eurorack modules, or custom DIY instruments based on Daisy hardware. It's obviously fun to use gen~ on a computer, but do you find it especially rewarding to hold a physical object running gen~ patches? Are there any particularly fun things you've made for yourself with Daisy and Oopsy?

GW: Yes, a couple of years ago I worked with the Electrosmith folks to develop Oopsy as a fast workflow from gen~ to their Daisy hardware. It’s been great seeing how this has been picked up in the community! I have helped out with some workshops that have used this, including the SynthUX Hackathon last year, and advised at a distance on others, from grad student projects to commercial projects like the Modbap Osiris eurorack module (which was prototyped in gen~ too!). I’d like to be more involved with eurorack module designs in the future; I think there’s still a huge potential in mixing together digital and analog processes – making the best of what each can do – in a really hands-on exploratory environment.

The Daisy has a ton of memory that can be used for extremely long and precise delays, looping, complex reverbs and granulation, and other kinds of capture & resynthesis stuff that analog just can’t do. I’ve been enjoying playing around with some complex Trevor Wishart-inspired audio waveset mangling, which happens to play very nicely with analog inputs! For many patches the Daisy can also run at high samplerates and very small block sizes, which opens up a lot of interesting feedback patching options too. A digital phase modulation oscillator fed into an analog wavefolder and back into the oscillator can make for some pretty wild sounds!

JJ: I think there has been a perception that gen~ is a mysterious set of tools for advanced Max users or DSP wizards porting their code into the interactive Max environment. But as the GO book seems to illuminate, gen~ has a lot to offer for anyone regardless of where they are on their Max journey. What advice or tips would you offer to someone who is interested in learning gen~ but is either relatively new to Max or feels intimidated by understanding DSP?

GW: Robert Henke and Tom Hall have both called gen~ a ‘hidden secret weapon’ in Max. We really don’t think that means that it is obscure or difficult at all, quite the opposite! Actually I think that a lot of things get easier once they are explored in gen~, because there are fewer ‘black boxes’ and it’s more flexible for digging into the details that matter to you. As we say in the first chapter, nearly all gen~ patches are made from only about 20 different objects, and all cables are the same type and rate, so there’s not much vocabulary or grammar to learn. Which means you can focus more on the nature of signals and processes, not the software interface.

We wrote the book hoping to show that digital signal processing (DSP) also doesn’t need to be surrounded by a mystique of impenetrability either – the core idea is that a lot of very useful and powerful DSP techniques boil down to a few very simple circuits and ideas that are pretty easy to express at a sample-by-sample level. We walk through these processes with graphs, scope views and diagrams, rather than relying on math notations that not all readers would be familiar with, and more importantly, we try to start from a simple problem or idea and then explore how it behaves and what it can be used for, which then leads on to more interesting patches.

I’ve heard from quite a few people - on the forum, on Discord, or just chatting - that they wished they’d dug into gen~ earlier when learning Max. So based on that I’d say try it as soon as you are familiar with the basics of patching! I also recommend digging through the gen~ examples that come with Max - there’s a lot of them from simple to complex, but they cover a lot of bases. Maybe take one of those (or one of the patches that comes with the GO book) that looks like you can follow the basic idea. First add a couple of outlets for debugging purposes so you can look at the signal in different places – sending them to number~, scope~, spectroscope~, capture~, and other objects in the Max patch. Then try to modify it – add some parameters, make it stereo, try inserting a few different operators in different places to hear what happens, etc. Or take two and think of a way to combine them into something new, all inside one gen~ patch. If you get stuck there’s lots of help to be had on the Cycling ‘74 forum, Discord, and Facebook groups! Recently there’s also quite a few folks posting gen~ patching videos on YouTube.

Patch 3 - Strings Into Skies

JJ: Speaking more broadly about Max as a whole now, what would you say to someone who is more accustomed to traditional music production tools, whether in hardware like samplers and modular synths or software such as DAWs, as words of encouragement to check out Max and see what it's all about?

GW: Everyone is different, but here’s one take: You can solve some problems by getting a DAW and a few plugins, but often they just don’t work quite how you want them to, and once you hit that wall there’s not much you can do – each piece of software is pretty much a black box. It can solve a problem quickly, but doesn’t translate well to other problems. Max might look like it has a steeper learning curve than getting a few DAWs and plugins, but what you learn is multiplicative (if you learn N things you can apply them in NxN ways). Before long it becomes faster to solve things by patching, rather than looking for a new piece of software. And that knowledge and understanding stays with you – Max patches written 20 years ago still work (unless they depended on unmaintained 3rd party code). Not many 20 year old VSTs still work! And more importantly, the ideas translate to other environments too.

Sometimes the immense range of software available today can create a tendency to overwhelm a project with too many layers of processing and effects – which are likely the same effects that everyone else is using too. Sometimes a work is more compelling when it is stripped down to a more lithe and adaptive idea – and it doesn’t have to be especially complex. People are still going back to the minimal patterns Mark Fell was doing with SND two decades ago, despite how simple the algorithms are. Working with something like Max makes it super accessible to explore these kinds of spaces, and make them uniquely your own. And of course they can still run within Live or anything RNBO can export to, so you don’t have to reinvent the universe. Honestly it’s a pretty amazing time to be doing these things, and the learning resources around are fantastic; you just need to trust yourself and donate some time to it!

JJ: Finally, beyond Max, gen~, and the books, what are you up to these days? Any cool projects you can talk about?

GW: Apart from working on book 2, and generally as a university professor, I’ve been very busy with an ongoing project called “Artificial Nature”. This is a collaboration with Haru Ji that’s been running for more than fifteen years now, exploring biologically-inspired complex systems within a series of immersive and interactive art installations. Our most recent work, “Entanglement”, fills a 16x16m room (floor and walls) with a view of an underground mycorrhizal network, connecting with tree roots around you, while in the above ground we had generative AI iterating on possible forms of procedurally-generated trees. It’s a perspective mostly from beneath the earth, where all kinds of complex dynamics and multi-species signaling communications are happening. At the same time it’s musing on non-human intelligence. We humans have been so focused on the visible world of what we can see and use that until very recently most of us didn’t even consider that there might be a vast web of fungus connecting trees beneath our feet. And the same happens with our own minds: we focus so much on rational, conscious, language- and image-based thinking, that we tend to assume that this is what intelligence has to be (even though most of our brain activity is neither conscious nor rational). So while the above-ground parts of our artwork use generative AI, which confabulates more of whatever humans tend to like to take pictures of, the below-ground is something completely different, a dynamic complex ecosystem of interrelations, a kind of vast decentralized brain.

In this and other Artificial Nature projects I focus on the algorithmic processes, and it’s interesting for me where these overlap with the kinds of algorithms we use in audio signal processing. There’s lots of examples from artificial life research where you can model complex life-like behaviours from a small set of fairly simple rules, which should be a familiar theme for anyone exploring generative music! A classic example here is bird flocking, which can be explained as three regulatory systems for maintaining distance and heading with neighbors. You can easily see regulatory systems underlying biological tropisms as difference-minimizing systems – which also happens to be what lowpass filters and slew limiters are. Similarly you can think of a neural network layer as a lot of mixers fed into waveshapers/limiters, and training is the process of setting the mixers’ knobs. I’m very interested in these kinds of cybernetic underpinnings between biological processes and sonic algorithms. The second book digs into these threads too!
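The "difference-minimizing" reading of filters and the "mixers into waveshapers" reading of a neural layer can both be sketched in a few lines of Python. This is only an illustration under simple assumptions (a one-pole lowpass, a linear slew limiter, and tanh as the waveshaper); the function names are hypothetical and none of this is taken from the book's patches.

```python
import math

def one_pole_lowpass(x, coeff):
    """Each sample, close a fixed fraction of the gap between output and input."""
    y = 0.0
    out = []
    for xn in x:
        y += coeff * (xn - y)
        out.append(y)
    return out

def slew_limiter(x, max_step):
    """Each sample, close the gap between output and input by at most max_step."""
    y = 0.0
    out = []
    for xn in x:
        diff = xn - y
        y += max(-max_step, min(max_step, diff))
        out.append(y)
    return out

def mixer_into_waveshaper(inputs, knobs):
    """One 'neuron': a mixer (weighted sum) fed into a tanh waveshaper."""
    return math.tanh(sum(k * v for k, v in zip(knobs, inputs)))

step = [1.0] * 4
print(one_pole_lowpass(step, 0.5))   # [0.5, 0.75, 0.875, 0.9375]
print(slew_limiter(step, 0.25))      # [0.25, 0.5, 0.75, 1.0]
```

Both filters share the same structure, measuring the difference between where the output is and where the input wants it to be and then reducing it; they differ only in how much of that difference they are allowed to remove per sample.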