How do you get started making electronic music? And once you get there, how do you start to incorporate elements of expressivity? Is musical expression important in electronic music? Should we think of electronic music performance similarly to how we approach performing with acoustic instruments, or is it a new beast entirely?

We recently teamed up with performer-composer/educator Sarah Belle Reid to give away two Intuitive Instruments Exquis controllers. The Exquis is an MPE-capable MIDI/CV controller that rings in at a surprisingly low price ($299 at the time of publication). We have talked with Reid in the past about instrument design, her music, and her educational practice, and we felt that this giveaway was a good opportunity to resume our conversations about performative interaction in electronic music.

Reid is an interesting figure in the online synthesizer space: she is a trumpet player and improviser turned electroacoustic musician whose music leans toward the noisy, chaotic, and unpredictable. Her YouTube videos share a unique perspective on both new and old instruments and techniques, with a consistent focus on building dynamic, real-time interactions between herself and her tools. And, since 2020, Reid has run an online educational program called Learning Sound and Synthesis, in which she gives musicians a firm footing in the history, foundational concepts, and experimentally minded attitudes that fuel creative electronic music, all using the free software VCV Rack as a learning aid. In this interview, we touch on all of these topics and more; find the full interview below.

And of course, head to our contests page before November 26th, 2024 for a chance to win an Intuitive Instruments Exquis—and especially if you're new to the world of electronic music-making, be sure to check out Sarah's YouTube channel and her course, Learning Sound and Synthesis.

An Interview with Sarah Belle Reid

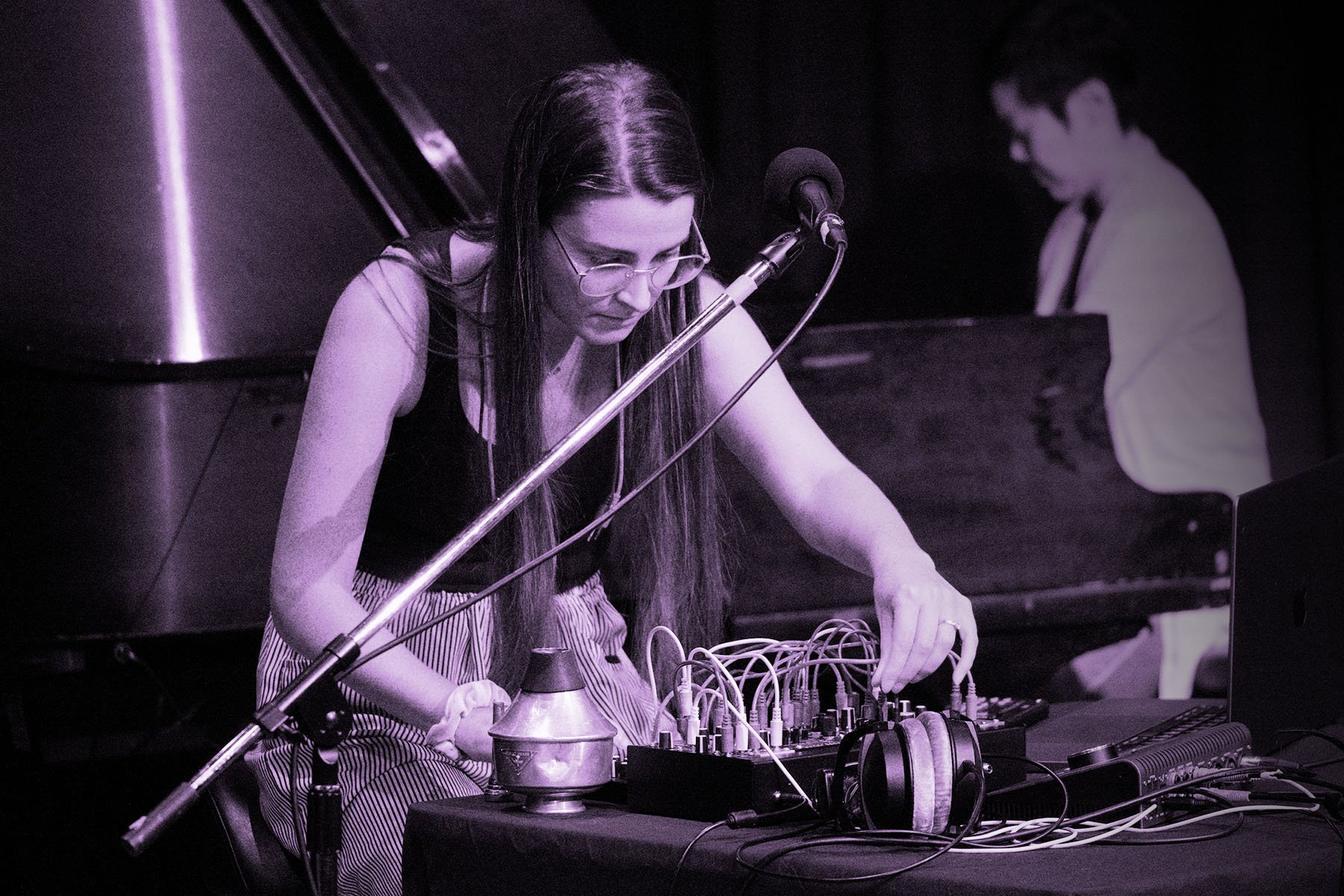

[Above: Sarah Belle Reid at Blaues Rauschen Festival 2024, Germany. Photo credit Etta Gerdes.]

Perfect Circuit: We've talked to you before, so we won't get too deep into your background — but we're curious. We know that you're a trumpet player by training; do you feel that your background as an acoustic instrumentalist has influenced your approach with electronic instruments?

SBR: Yes, absolutely. As an acoustic instrumentalist I think a lot about “activating” sound, and how different physical interactions with my instrument will shape and influence the resulting sound. For example, when you use a soft articulation on a trumpet, the resulting sound will be relatively quiet and round, whereas if you use a strong articulation, the sound is typically brighter and louder. Not only do these physical gestures activate the sound, they also play a big role in shaping and coloring the sound over time.

I approach electronic instruments in a similar way: always thinking about how I can use physical input (whether it’s coming from my trumpet through a microphone, or through a MIDI controller, etc.) to activate and influence the sounds I’m creating.

PC: When you first got started working with electronics, what were your first steps?

SBR: I was first introduced to the world of electronic music through physical computing and instrument design. I was doing my grad studies as a classically trained trumpet player, and had the idea to build an electronic sensor-based device that could attach onto my trumpet to turn it into an electronically augmented instrument.

My first steps were playing around with Arduinos and building simple breadboard circuits to make LEDs blink, then gradually adding in different kinds of sensors and controllers, eventually leading to building my augmented trumpet, which I call MIGSI. Even though I was working a lot with electronics at this time, I wasn’t really making electronic music yet—it was mostly a lot of soldering, sensors, and looking at raw data. My next big step was actually learning how to DO something with the instrument I had built… and for that, I realized I really needed to learn the foundations of synthesis and sound design.

PC: Why did modular synthesizers seem like an attractive way to develop your fluency as an electronic instrumentalist?

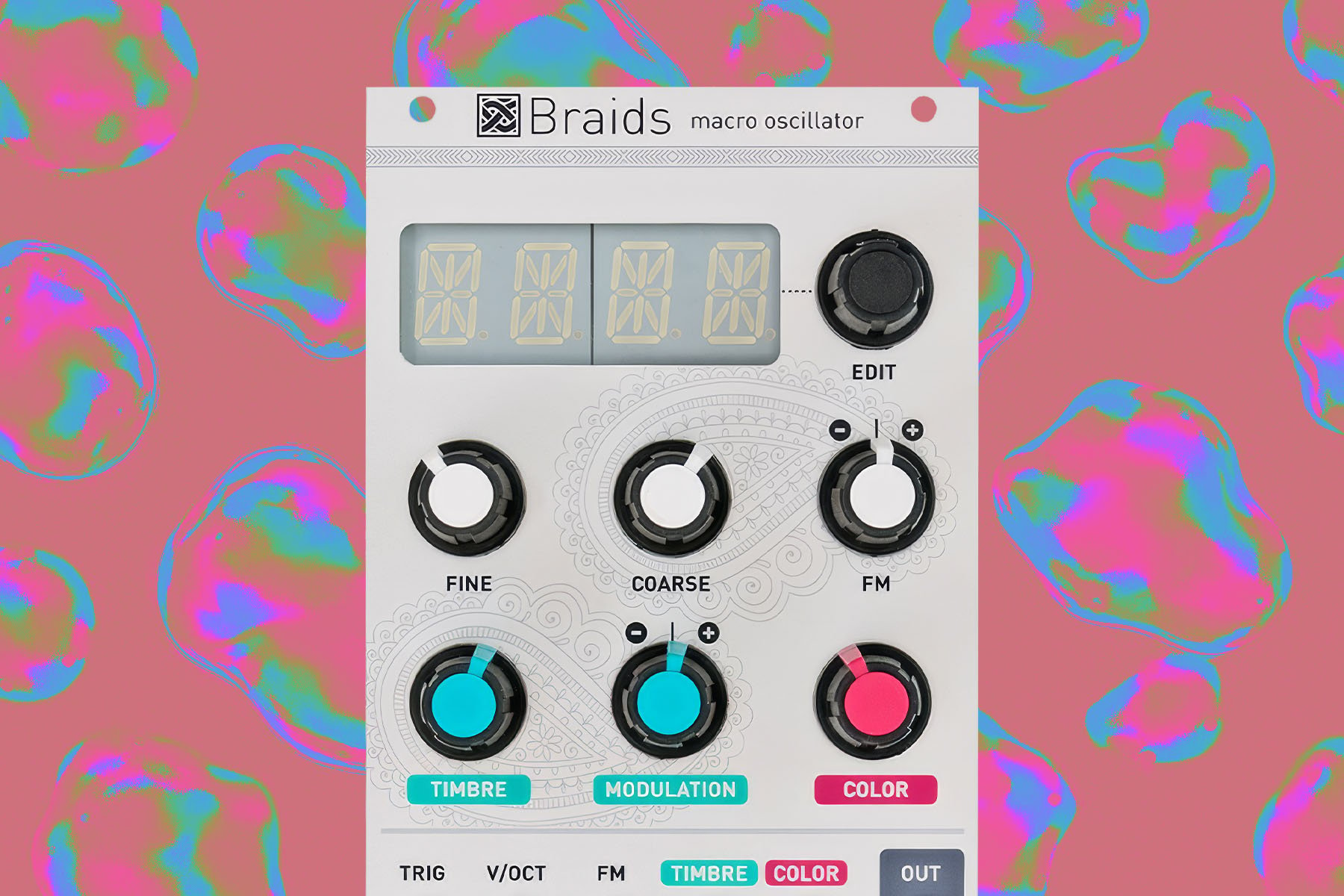

SBR: I think modular synthesizers are the perfect tools for gaining fluency in sound design and electronic music because they force you to look “under the hood” and really learn all the tools, parameters, and connections that go into crafting a unique sound. When you work with a modular synth, you end up learning about foundational concepts that are present in pretty much any synthesizer out there (things like oscillators, filters, envelopes, etc.)—and you have the ability to customize how everything is connected and develop a super personalized instrument.

After I gained fluency in modular synths, I noticed that I was way more comfortable with tools I had previously struggled with (programming languages like Max/MSP and SuperCollider, and even some of my fixed-signal-path synths, all started to make a lot more sense!).

PC: So, what was the first modular synthesizer you personally worked with?

SBR: It was a vintage paperface Serge system in the electronic music studio at CalArts.

[Above: Sarah Belle Reid at the CalArts paperface Serge system, c. 2018.]

PC: Serge seems like a pretty wild starting point! [laughs] What was your approach to learning it?

SBR: It was, indeed! The Serge is an incredible instrument, but it definitely wasn’t the most intuitive starting point. At first, my approach to learning it was very haphazard—it seemed as though the popular advice around how to learn modular synths at the time was to just “plug things in and explore,” so that’s the route I took. Unfortunately, it led me to feeling pretty intimidated and lost for months.

Eventually, I decided to go back to square one and try to relearn everything in a much more methodical way. I broke everything down into the most fundamental pieces possible: oscillators, waveforms, sound, etc., and studied one area at a time until it all started to make sense. This approach really worked for me and I gained a lot of confidence and fluency with modular and synthesis in general.

What I realized for myself—and I’ve seen this in many of my students as well—is that for some folks, “just plug things in and explore” as the very first step in a learning journey can create more confusion than freedom. It’s much easier to intuitively explore and play when you have some sort of foundation in place to help you get started. I’ve brought this approach into all of my teaching as well, always striking a balance between developing vocabulary and technical skills, while leaving room for creative exploration and finding your own way.

PC: You've continued to use modular synths in the years since, of course. So today, how do modular synths fit into your workflow as a musician/composer?

SBR: The way I use modular synths changes depending on the project I’m working on, but my favorite thing to do is to improvise with them. This usually involves setting up a patch that partially responds to my physical input, and partially has a mind of its own (typically through some kind of generative, random, or chaotic structure). I love to think about my instrument as a kind of duo partner, who can listen to me, respond to me, and occasionally steer things in a surprising, new direction.

PC: Do you find that there are any persistent challenges in getting the acoustic and electronic aspects of your work to mesh? Is it difficult to get electronic instruments to "play nicely" with acoustic instruments?

SBR: When I first started to combine electronic and acoustic instruments together, there were a couple of things that really stood out to me:

1. Electronic instruments don’t have to breathe (trumpet players do).
2. Unless told otherwise, electronic instruments are very good at playing the exact same thing over and over again with no variation (trumpet players aren’t).

I’m obviously joking a bit with number 2, but these ideas play a very big role in how I think about building modular synth patches that incorporate acoustic instruments:

Because trumpet players (and many other acoustic instrumentalists) have to breathe, I found it bizarre to have a synth that just droned on forever. It felt like a duo partner who wasn’t really listening. So, one of the most important ingredients in my synth patches is silence (breath), and ensuring that they can move between sound and silence in many different ways. Similarly, I noticed that acoustic instrumentalists always have some kind of variation in their playing from note to note, even if it’s extremely subtle. So, I always try to give my synthesizer patches that same kind of expression, making sure that each note isn’t always the exact same pitch, articulation, loudness, etc. In my opinion, this creates music that feels more alive and dynamic, and it helps the acoustic and electronic sound worlds to mesh in a more cohesive way.

[Above: a video in which Sarah Belle Reid demonstrates concepts in musical interaction using VCV Rack.]

PC: I'm curious, then—if you're looking for this really specific type of expression or interaction, is there a particular electronic instrument, system, or module that is really important for your workflow?

SBR: The most important tools in my workflow all have to do with interaction and control. When I’m combining my trumpet with my modular synth, my setup always involves an envelope follower with a variable threshold control, so I can derive both continuous and momentary control information from my trumpet input. When it’s a purely electronic setup, I always prioritize some kind of fun and expressive controller so that I can physically push, move, massage, and play with the sounds I’m creating.
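For readers curious about what an envelope follower with a threshold actually computes, here is a minimal Python sketch. It is our own illustration, not Reid’s hardware: it rectifies an audio signal, smooths it with one-pole attack/release filters to get a continuous control signal, and compares that envelope against an adjustable threshold to get a momentary gate. The function name, attack/release times, and threshold value are all assumptions chosen for clarity.

```python
import math

def envelope_follower(samples, sample_rate, attack_ms=5.0, release_ms=100.0, threshold=0.2):
    """Return (envelope, gate) lists for a mono input signal (illustrative sketch).

    envelope: continuous control, a smoothed measure of how loud the input is
    gate:     momentary control, 1 while the envelope is above threshold, else 0
    """
    # One-pole smoothing coefficients derived from the attack/release times
    attack_coef = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    release_coef = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    env = 0.0
    envelope, gate = [], []
    for x in samples:
        level = abs(x)  # full-wave rectification
        # Rise quickly when the input gets louder, fall slowly when it gets quieter
        coef = attack_coef if level > env else release_coef
        env = coef * env + (1.0 - coef) * level
        envelope.append(env)
        gate.append(1 if env > threshold else 0)  # the "variable threshold" comparison
    return envelope, gate

# Example: a loud 200 ms burst followed by one second of silence, at a 1 kHz sample rate
sr = 1000
burst = [0.8 if i % 2 == 0 else -0.8 for i in range(200)]
env, gate = envelope_follower(burst + [0.0] * 1000, sr)
# The gate opens shortly after the burst begins and closes during the silence
```

Raising `threshold` means only louder playing opens the gate, which is one way a performer can tune how "sensitive" the electronics are to the acoustic input.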

PC: So of course, I'd like to talk about controllers, too. How do controllers generally fit into your workflow? How do you think about them?

SBR: Yeah, controllers are a huge part of my workflow. You can play the exact same patch on a modular synth with three different controllers, and you’ll end up with three wildly different results.

My favorite kinds of controllers are ones that give you multiple different aspects of control from a single physical UI element—like joysticks, which combine X and Y motion, or pressure-sensitive touch plates that also generate momentary triggers, for example. What I love about these kinds of “multi-parametric” controllers is that every single physical gesture produces multiple different aspects of control, which can all be routed or mapped to independent aspects of the patch simultaneously—opening up huge potential for creating very intuitive and expressive interactions.

PC: Given that you're thinking about these types of multi-parametric control sources, I'd imagine that the idea of mapping is a big deal to you. Can you give us a working definition of mapping?

SBR: So yeah, sure—mapping is the process of applying data or control information to one or more destinations (typically parameters of sound). For example, if a knob on your MIDI controller produces MIDI CC 30, you could configure your synthesizer to receive MIDI CC 30 and use it to control the cutoff frequency of your filter. In other words, you’re mapping the data from your MIDI controller to the parameters of sound on your synthesizer. You could think of control voltage in modular synths in the same way—connecting a CV source to a destination is a similar type of mapping.
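The direct, one-to-one mapping described above can be sketched in a few lines of Python. This is a hypothetical illustration, not any particular synth’s firmware: the 20 Hz to 20 kHz cutoff range and the exponential curve are our assumptions, while CC 30 comes from the example.

```python
CUTOFF_CC = 30                  # the CC number from the example above
MIN_HZ, MAX_HZ = 20.0, 20000.0  # assumed cutoff range for a hypothetical filter

def cc_to_cutoff(cc_value: int) -> float:
    """Map a 7-bit MIDI CC value (0-127) to a filter cutoff frequency in Hz.

    The exponential curve is an assumption: it spreads the knob's travel
    evenly across octaves instead of cramming most of it into the highs.
    """
    if not 0 <= cc_value <= 127:
        raise ValueError("MIDI CC values are 7-bit (0-127)")
    t = cc_value / 127.0
    return MIN_HZ * (MAX_HZ / MIN_HZ) ** t

def handle_control_change(cc_number: int, cc_value: int, synth_params: dict) -> None:
    """Route one incoming CC message; only CC 30 is mapped, everything else is ignored."""
    if cc_number == CUTOFF_CC:
        synth_params["filter_cutoff_hz"] = cc_to_cutoff(cc_value)

params = {}
handle_control_change(30, 64, params)  # turning the knob halfway sets a mid-range cutoff
```

Even with a curve applied, this is still a one-to-one mapping: each knob position always produces the same cutoff.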

In that example, the mapping is very direct and one-to-one…but it can be more complex and obfuscated. I personally like to strike a balance between direct mappings and complex mappings in my patches, in order to create more of a sense of collaboration between myself and the instrument.

PC: Can you give us some examples of how a more complex mapping might work?

SBR: One example of a way to make a direct mapping slightly more dynamic is to control something that is controlling something. For example, instead of mapping four buttons on your controller to four specific pitches on an oscillator (which would be a very direct mapping), you could instead map one button to trigger a random voltage generator, and then use the random voltage generator to change the pitch of the oscillator. This way, you still have some direct control (you can tell the instrument when to change pitches), but you relinquish the specific control over what new pitch gets chosen, and let the synthesizer surprise you with its decision.
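Here is a hedged Python sketch of that "control something that is controlling something" idea. The class and function names are hypothetical; the random source behaves like a sample-and-hold module (a new value on each trigger, held in between), and the pitch conversion assumes the common 1 V/oct convention with 0 V set to roughly middle C.

```python
import random

def volts_to_hz(volts, base_hz=261.63):
    # 1 V/oct: each additional volt doubles the frequency; 0 V = base_hz (assumed ~C4)
    return base_hz * 2.0 ** volts

class RandomVoltage:
    """Sample-and-hold style source: a new random value per trigger, held in between."""
    def __init__(self, low=0.0, high=2.0, seed=None):
        self.low, self.high = low, high
        self._rng = random.Random(seed)
        self.value = low

    def trigger(self):
        self.value = self._rng.uniform(self.low, self.high)
        return self.value

class IndirectPitchPatch:
    """Button -> random voltage -> oscillator pitch.

    The performer decides *when* the pitch changes; the random source decides *which* pitch.
    """
    def __init__(self, seed=None):
        self.random_cv = RandomVoltage(seed=seed)
        self.osc_hz = volts_to_hz(self.random_cv.value)

    def press_button(self):
        self.osc_hz = volts_to_hz(self.random_cv.trigger())
        return self.osc_hz

patch = IndirectPitchPatch(seed=1)
new_pitch = patch.press_button()  # somewhere in the two-octave range above ~C4
```

Compare this with a direct mapping, where each of four buttons would always set the same four pitches.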

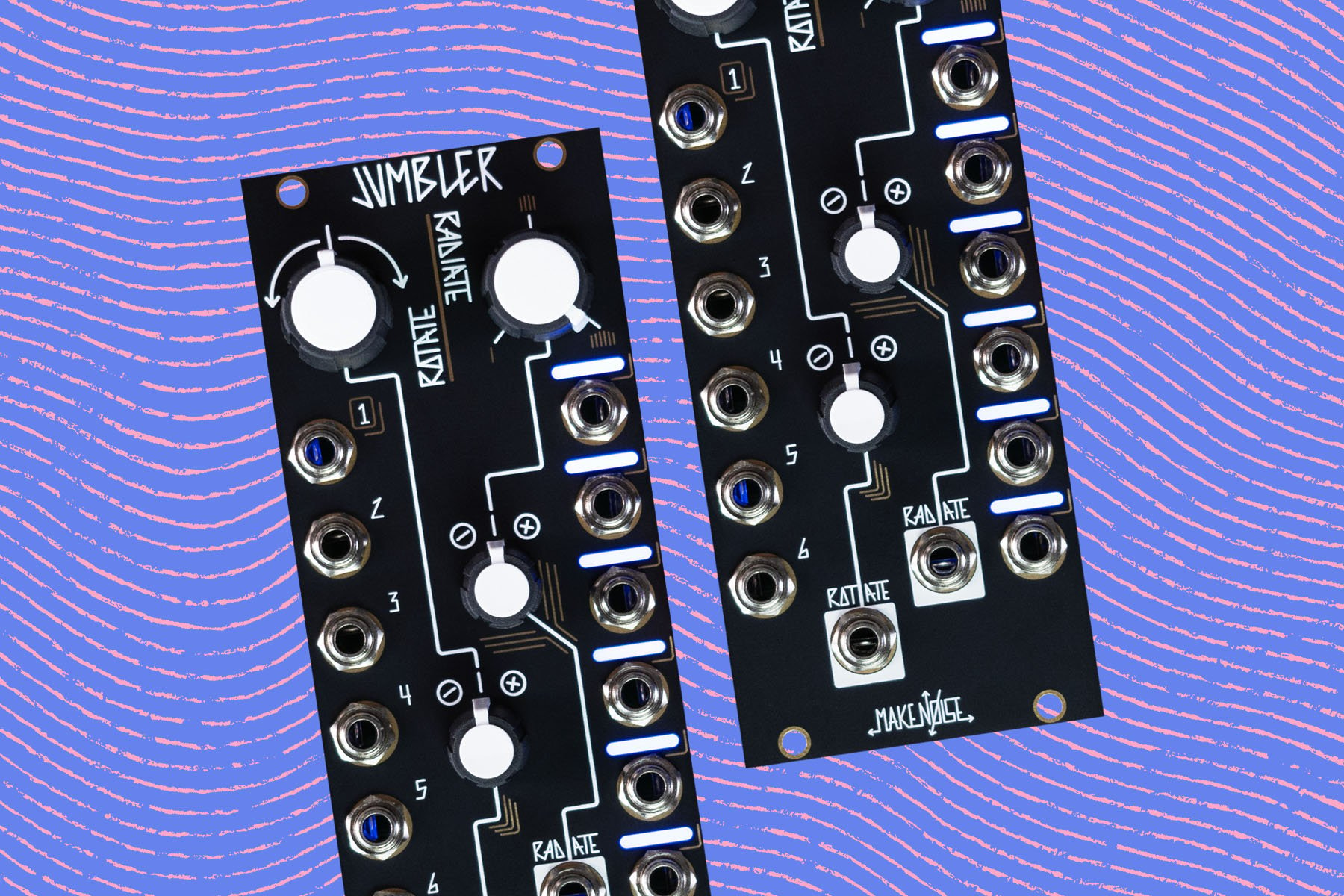

As I mentioned earlier, I’m also a big fan of multi-parametric mapping, in which you use a single gesture or UI [ed.: user interface] control to generate multiple pieces of control information. For example, I use the Make Noise 0-Ctrl a lot; it lets you generate a continuous pressure CV, a gate signal, and three independent fixed voltages for every key press. Sticking with the example I just shared, I love to use a single keypress on the 0-Ctrl to simultaneously send pitch information to an oscillator, trigger a new random voltage which changes some aspect of my patch, and generate continuous pressure control to smoothly modulate the sound’s timbre.

Other ideas include conditional mapping (where the routing or behavior of a control changes depending on different conditions), one-to-many mapping (where you send one control source to many different parameters of sound and scale each one to respond differently), or introducing some kind of non-linearity or feedback into the mapping path to distort the relationship between the control source and its destination.
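To make these mapping strategies a bit more concrete, here is a small Python sketch of each one, operating on a normalized control value between 0 and 1. All parameter names ("reverb_mix", "delay_feedback", and so on) and curve choices are hypothetical examples, not settings from Reid’s patches.

```python
import math

def one_to_many(x):
    """One control source fanned out to several parameters, each scaled differently."""
    return {
        "filter_cutoff": x,             # follows the control directly
        "reverb_mix": 1.0 - x,          # inverted: more control, drier sound
        "lfo_rate": 0.1 + 0.9 * x * x,  # squared curve: barely moves at first, then accelerates
    }

def conditional(x, mode):
    """The same control reaches a different destination depending on a condition (a mode switch)."""
    return {"pitch_bend": x} if mode == "A" else {"delay_feedback": x}

def nonlinear(x, drive=4.0):
    """A tanh curve bends the control/destination relationship.

    Normalized so 0 maps to 0 and 1 maps to 1, but small movements are exaggerated.
    """
    return math.tanh(drive * x) / math.tanh(drive)

class FeedbackMapper:
    """Feeds its previous output back into the mapping, so the destination lags and smears."""
    def __init__(self, amount=0.7):
        self.amount = amount  # 0 = no feedback (direct), closer to 1 = more sluggish
        self.prev = 0.0

    def __call__(self, x):
        self.prev = (1.0 - self.amount) * x + self.amount * self.prev
        return self.prev
```

These can also be chained: a single gesture could pass through `nonlinear` before being fanned out by `one_to_many`, which is where mappings start to feel less like a switchboard and more like a collaborator.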

And this is just scratching the surface!

PC: Do you have any examples of how this thinking might play out in your own music?

SBR: I recently finished touring a new piece of mine called Manifold that incorporated a lot of these ideas. The piece is composed for trumpet, Max/MSP, and a small modular synthesizer. There are many sections throughout the piece where the “composed” element is more about timing and interaction, and the resulting sound is entirely generative—different with each performance.

For example, the input from my microphone is analyzed and turned into multiple different control sources: there’s an envelope follower that creates a continuous control source depending on how loud I play, and triggers that are created using onset detection of new notes and gestures.

The triggers are used to do a number of things simultaneously: they dynamically alter the panning of sounds, they trigger new random values which modulate the way the trumpet sound is being processed, and they occasionally trigger the recording and playback of sampled material.
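The idea of one trigger driving several destinations at once can be sketched as follows. This is our own simplified illustration, not the Max/MSP patch used in Manifold: real onset detection is more sophisticated, so here the rising edges of a 0/1 gate stand in for detected note onsets, and the probability of toggling sample recording/playback is an assumed parameter.

```python
import random

def rising_edges(gate):
    """Indices where a 0/1 gate goes from 0 to 1: a crude stand-in for onset detection."""
    return [i for i in range(1, len(gate)) if gate[i] == 1 and gate[i - 1] == 0]

class TriggerRouter:
    """One onset trigger fans out to several destinations simultaneously (hypothetical sketch)."""
    def __init__(self, sample_chance=0.2, seed=None):
        self.rng = random.Random(seed)
        self.sample_chance = sample_chance  # assumed probability of toggling the sampler
        self.pan = 0.0          # -1 = hard left, +1 = hard right
        self.processing = 0.5   # a normalized processing parameter, e.g. a delay amount
        self.recording = False

    def on_onset(self):
        self.pan = self.rng.uniform(-1.0, 1.0)   # new random panning position
        self.processing = self.rng.random()      # new random processing value
        if self.rng.random() < self.sample_chance:
            # "Occasionally": flip between recording and playing back sampled material
            self.recording = not self.recording
        return self.pan, self.processing, self.recording

router = TriggerRouter(seed=7)
for _ in rising_edges([0, 1, 1, 0, 0, 1, 0, 1]):  # three detected onsets
    router.on_onset()
```

The performer still controls *when* things change (by starting new notes), while the random values decide *how* they change.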

Performing with this setup is so rewarding for me because it truly feels like a duo collaboration between myself and the electronics. I have control over when I want to influence or change the sound world I’m interacting with, but the exact direction the synth / computer decides to go in each new section of the piece is always new and unexpected.

[Above: Sarah Belle Reid at the Buchla 400; image via The Buchla Archives]

PC: Can you point to any precedents that have led you to think about mapping as a critical concept? Do you have any sort of, I guess, historical inspirations that have informed this way of approaching interaction?

SBR: Mapping and interaction are concepts that many instrument designers have been considering and exploring for decades. In a 1983 interview in Polyphony magazine, Donald Buchla said, “I like to regard an instrument as consisting of three major parts: an input structure that we contact physically, an output structure that generates the sound, and a connection between the two.”

He goes on to emphasize the unique opportunity that we have as electronic musicians (and instrument designers) to separate the relationship between input and output on our instruments: a single key press doesn’t have to simply yield a single new note; a knob doesn’t have to output a linear control from 0 to 1; a button doesn’t have to remain mapped to the same control for the entire duration of a performance; and so on.

For me personally, thinking about control mapping as a dynamic and potentially reconfigurable connection between input and output structures is deeply inspiring. Even though these ideas are not new, there is still a lot more that can be done with them. I would love to see more instrument designers in the future go beyond the traditional one-to-one mapping and linear interaction that we commonly see, and to really push the boundaries of what a musical instrument might look like and how we can develop new modes of interaction, expression, and connection with our instruments.

I also think there’s a lot of potential for the development of controllers that don’t necessarily prioritize pitch-based music or assume a specific workflow, but instead focus on giving musicians access to a wide range of multi-parametric control that can be arbitrarily mapped and utilized in whatever way best fits their musical needs. I’m personally quite excited to think of all the new musical directions that might emerge from deeply exploring these questions!

PC: Obviously, the Intuitive Instruments Exquis recently came into your life. What is your take on it so far?

SBR: It’s squishy! [laughs]

Jokes aside—I think it’s a really interesting controller. It’s very sensitive to touch, and the keys offer great haptic feedback. I love that you can get X, Y, and Z (pressure) control from each key press. The isomorphic key layout is nice too because it doesn’t force you to think of pitch in a traditional linear way, if you don’t want to.

PC: Are there any particular ways you've been using it that you find interesting or inspiring?

SBR: The Exquis leverages a lot of the multi-parametric control ideas I was referring to earlier in a nice way. As an MPE controller, it can send out three different aspects of control on every single key press, which provides a ton of flexibility. I’ve used a number of MPE controllers in the past, and the main thing that stood out to me about the Exquis is how sensitive the keys are to different physical gestures. You can gently rest your hand on the controller and generate a subtle flurry of activity, or you can really dig into an individual key and explore a wide range of control.

I also really like that it has CV outputs built right into the controller, so you can hook it up to your Eurorack or semi-modular synth without needing a MIDI-CV converter. The CV outputs send out pitch (V/Oct + pitch bend), gate, and mod (which is derived from aftertouch), but it’s important to remember that you can use those signals to modulate any aspect of your synth! I’ve been having a lot of fun running them into modulation inputs on delays and other effects, and integrating them into chaotic / feedback-based patches.
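For readers new to control voltage, here is a rough Python sketch of how a note number plus pitch bend can be combined into a single 1 V/oct pitch CV. This is not taken from the Exquis documentation: the reference note for 0 V and the pitch-bend range vary between devices and settings, so both are exposed as assumed parameters.

```python
def note_to_volts(note, bend=0, bend_range_semitones=2.0, zero_volt_note=60):
    """Convert a MIDI note plus a 14-bit pitch bend value to a 1 V/oct control voltage.

    Assumptions (check your own device): 0 V corresponds to zero_volt_note, and a
    full bend spans +/- bend_range_semitones. bend is the signed 14-bit value,
    -8192..8191, where 0 means no bend.
    """
    if not 0 <= note <= 127:
        raise ValueError("MIDI notes are 0-127")
    if not -8192 <= bend <= 8191:
        raise ValueError("pitch bend is a signed 14-bit value (-8192..8191)")
    semitones = (note - zero_volt_note) + bend / 8192.0 * bend_range_semitones
    return semitones / 12.0  # 1 V per octave = 1/12 V per semitone

cv = note_to_volts(67, bend=4096)  # G above the reference note, bent up one semitone
```

Because the result is just a voltage, nothing stops you from patching it into a delay time or filter input instead of an oscillator, which is exactly the kind of repurposing described above.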

PC: Of course, we know that—in addition to composition and performance—you're also very involved in education. Why is teaching/education so important to you?

SBR: Like we discussed earlier, I struggled a lot early on in my electronic music studies. At first, I didn’t feel confident in my ability to learn technical things, and as a result I often felt very overwhelmed and discouraged. I’m so grateful that I didn’t give up, however, because my creative practice has grown in so many amazing ways over the years.

One of the reasons I’m so passionate about teaching synthesis and electronic music is that it didn’t instantly "click" for me. It forced me to come up with a lot of creative ways of looking at complicated concepts and breaking them down into simple, bite-sized ideas. I’ve also realized over the years that I’m by no means the only person out there who struggles with feelings of self-doubt and overwhelm, or who simply finds these topics confusing; it’s more common than you think!

Teaching and education is important to me because it’s a way that I can reach people with big creative goals, who might be feeling a bit overwhelmed or discouraged themselves, and I can help to support them in realizing that they’re more than capable of learning and accomplishing the thing they want to do.

PC: Can you tell us a little bit about your approach to teaching?

SBR: The core of all of my teaching is focused on helping people make more music that feels like a true expression of themselves, and that they’re proud to share with the world. This means that I never push a specific genre or approach to making music onto my students. Instead, we work on building a solid foundation in technical skills, creative process, listening, and musicianship that will allow them to do their own unique thing—with confidence and mastery.

PC: So, what kind of topics do you generally deal with in your classes?

SBR: My signature program, Learning Sound and Synthesis, teaches you everything you need to become fluent in making music with synths and designing your own sounds from scratch. I focus on teaching universal foundations (general synthesis tools, concepts, and techniques that can be applied to any synthesizer workflow), rather than gear-specific techniques. I use VCV Rack to teach the class, because it’s free and accessible to anyone, but students are invited to use whatever instrument(s) or setup they’re most inspired by. Some folks stick to VCV Rack, others expand into building out a Eurorack modular synth, and others focus more on semi-modular and keyboard synths.

We start with a deep dive into the history and evolution of modular synthesizers, instrument designers, and pioneering musicians over the last 100+ years. From there, we move step-by-step through all of the most important synthesis tools, techniques, and workflows that you need to master in order to create dynamic sounds, patches, and music with synthesizers—ranging from classic synth leads to ambient soundscapes to experimental generative textures. Once all the foundations are in place, we move into more advanced topics, such as combining acoustic instruments with modular synthesizers, building interaction in your patches, using MIDI, external controllers, and other gear in your setup, and more.

We cover everything from the fundamentals of synthesis and signal routing, to techniques and workflows for musical composition, to how to put together live performances with electronic instruments.

PC: So, what is the structure of the class like? I know it's online—is it mostly video-based material? Or is there a personal interaction component?

SBR: I’ve designed Learning Sound and Synthesis to support people with a range of different learning styles and preferences. For those who have busy schedules and prefer to do things on their own, the full class is available as step-by-step video-based training that you can go through at your own pace, on your own schedule. When you enroll, you get lifetime access to the entire course, so you can really take your time with the material and come back as often as needed.

There are also a number of different opportunities for personal interaction, support, and feedback throughout the course. I host live coaching calls on Zoom throughout the class to support students, offer personalized feedback, and bring in new topics for discussion. They’re also always recorded so folks with busy schedules don’t miss out. And, once you've enrolled, you have access to all future calls, so you can really take your time.

We also have an amazing community forum attached to the class, where students are constantly sharing their progress and ideas, asking questions, forming accountability groups, and supporting one another. We host online study halls, open mics, and other community-focused events. Many Learning Sound and Synthesis students have had their debut live performances at our open mic events, and then have gone on to continue performing all over the country!

PC: So, I gather that due to the live component of the class, LSS is generally organized in cohorts—when is LSS opening back up for enrollment?

SBR: Learning Sound and Synthesis typically opens for enrollment twice per year. The next enrollment period will be in February 2025; folks who would like to be notified when enrollment reopens can join the waitlist.

PC: What other projects do you have on the horizon?

SBR: I’ve got a new record coming out in early 2025 with the incredible multi-instrumentalist / improviser Vinny Golia. As I mentioned, I just finished a year of touring a new quadraphonic work for trumpet and electronics—Manifold—and I’m excited to be heading into the studio soon to record it.

I’m also in the final stages of mixing an electroacoustic opera that I started to compose back in 2019—so stay tuned for more information on that, coming soon! If you want a behind-the-scenes glimpse into all of these projects (and more!) as they come together, check out my Patreon page.

[Cover image: Sarah Belle Reid at Michael Palumbo's Exit Points, Toronto, Ontario, CA 2024. Photo credit Eugene Huo.]